You're probably wrong about how things have changed

Five studies show that people don't know how public opinion has shifted over time

I wrote this as a paper for a scientific journal last year, but getting it published required making it boring. Here’s the version I wanted to write. All the research was done with my collaborator Jason Dana, but these particular words are my own.

Here are a few things we all know are true:

Donald Trump won the presidency in 2016 thanks to a huge increase in anti-immigrant sentiment

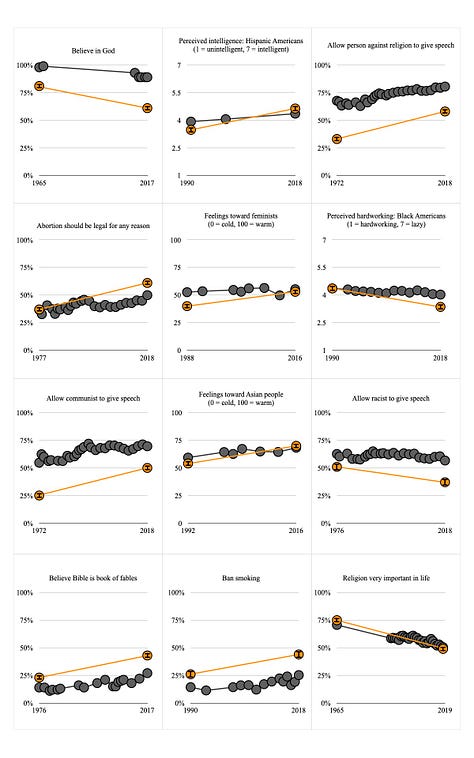

Nobody was worried about climate change in the 1990s, but now lots of people are freaking out about it

Mass shootings have led to a spike in support for gun control

Except...none of that is true.

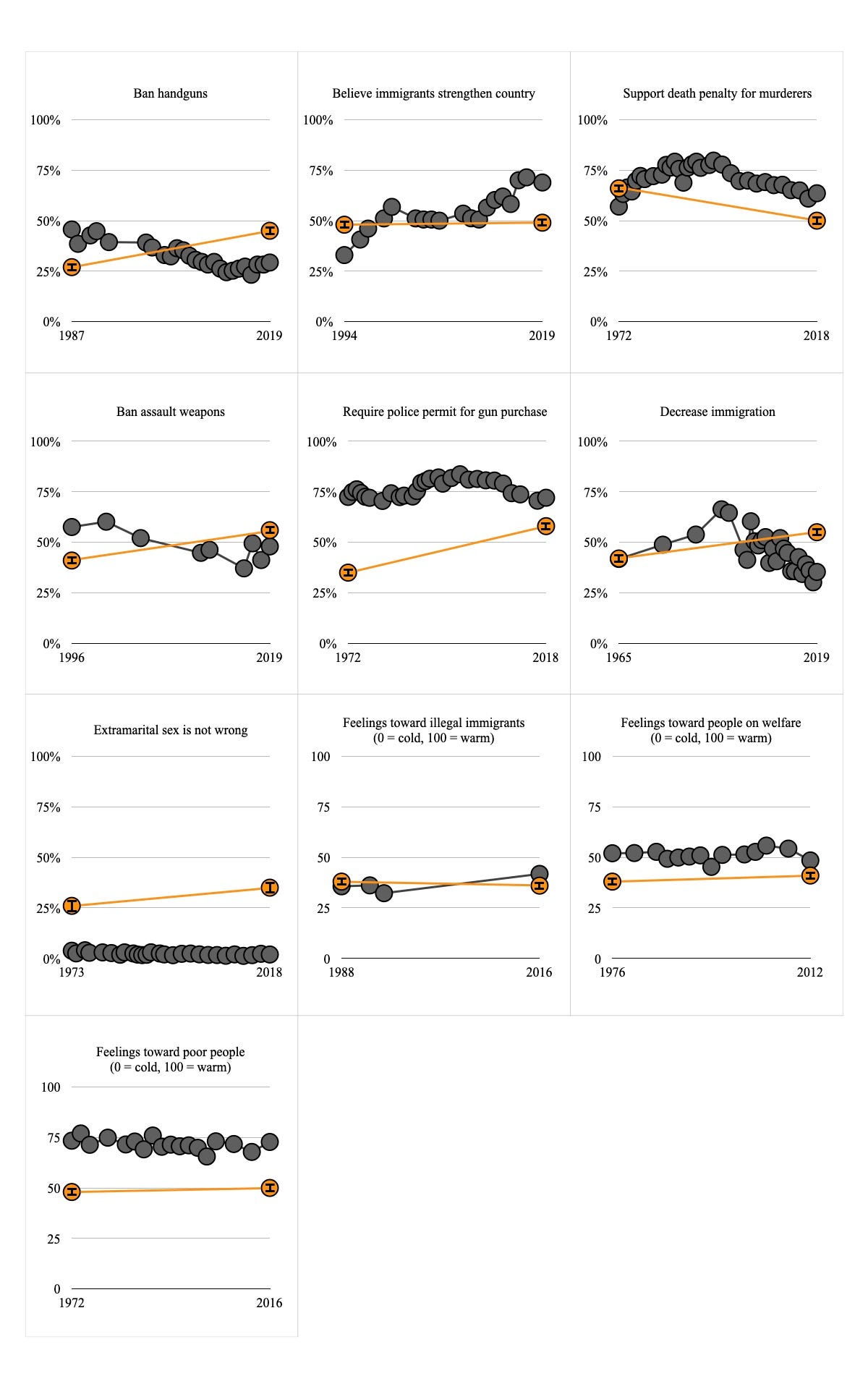

There wasn’t a huge increase in anti-immigrant sentiment around Trump’s election. Attitudes toward immigrants improved during his campaign, and they were at their highest point on record during his presidency.

Support for bans on handguns and assault weapons has decreased since the 1990s.

And most people were already worried about climate change thirty years ago, and that hasn’t changed much over time.

What’s going on here?

Maybe the polls are bad? This seems unlikely. If you run them right, polls seem to do a pretty good job. The fact that you can ask a few thousand people who they’re going to vote for and then predict a presidential election to within a few percentage points is darn near miraculous. There’s always some error, but we’re usually at least in the ballpark.

Maybe I’m just a big dummy and other people wouldn’t be surprised by this data like I was. That’s possible and easy to check—I just need to ask a bunch more people.

Maybe these are weird issues that have changed in surprising ways, but most opinions are more predictable. That’s possible and easy to check, too—I just need a bunch more questions.

If most people don’t understand how public opinion has changed on most issues, that’s a big problem. The world is big and confusing and people often don’t know what they should be doing, so they look around and do what other people do. “Norms,” as psychologists call them, are so powerful that people will say nothing as a room fills with smoke as long as no one else reacts either. Recently, we’ve discovered that changes in norms can be even more powerful. So if people mix up how things are changing, their misperceptions may turn into self-fulfilling prophecies. If you mistakenly think more and more people are dabbling in fascism, for example, maybe you start to do it too.

So I teamed up with my friend Jason Dana to answer three questions:

Do people know how important attitudes have changed over time?

If not, why not?

How might the world be different if people got this right?

You can check out all our data, code, materials, and preregistrations here.

STUDY 0: PICKING TOPICS

We got 150 people on Amazon Mechanical Turk[1] to list three social topics on which American public opinion has changed over the past 50 years. I then went through each of these answers by hand, grouped them into categories, and tried to find public opinion data for each of them. I only included surveys that used nationally representative samples and that asked the same question (or a very similar question) multiple times, separated by at least ten years.

I ended up with 51 public opinion questions, which included pretty much every controversial issue in American society (e.g., gun control, immigration, gender, etc.), and a few less controversial issues (e.g., premarital sex, smoking bans, being interested in politics).

STUDY 1: DO PEOPLE KNOW HOW THINGS HAVE CHANGED?

We got a nationally representative sample of 943 people using an online survey platform called Prolific. We showed each person a random sample of 20 out of the 51 public opinion questions and asked them to estimate how people responded at the earliest and latest time point for which we had data. It looked like this:

Then we compared how much people thought opinions had changed to how much opinions had actually changed.
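Here's a minimal sketch of that setup and comparison, assuming every answer is a percentage from 0 to 100. The function names, the seeded sampler, and the example numbers are all hypothetical; this is not the analysis code from the study.

```python
import random
from statistics import mean

N_QUESTIONS = 51      # questions in the full pool (from Study 0)
N_PER_PERSON = 20     # questions each participant saw


def assign_questions(rng: random.Random) -> list[int]:
    """Pick a random subset of question indices for one participant."""
    return sorted(rng.sample(range(N_QUESTIONS), N_PER_PERSON))


def change(earliest: float, latest: float) -> float:
    """Change in percentage points from the earliest to the latest time point."""
    return latest - earliest


def mean_estimated_change(estimates: list[tuple[float, float]]) -> float:
    """Average the change implied by each participant's (earliest, latest) guesses."""
    return mean(change(first, last) for first, last in estimates)


# Made-up numbers for one question, not real data.
actual = change(earliest=40.0, latest=50.0)   # opinion really rose 10 points
guessed = mean_estimated_change([(20.0, 60.0), (30.0, 50.0), (10.0, 60.0)])
error = guessed - actual                      # positive = the crowd overestimated the rise
```

The key design choice is comparing changes (latest minus earliest) rather than raw levels, since the question is about how much people think things have shifted.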

We wouldn’t expect any individual to be great at this, of course. These are pretty hard questions! But if people’s answers are just inaccurate, they should cancel each other out, and the average of their answers should be pretty close to the bullseye—that's how the wisdom of the crowd works. Crowds can be wise even about questions that seem really hard, like “how much does this ox weigh” or “which of these stocks will go up”. So even though “what did people think in 1972 and 2010” might be a hard question, people’s answers should converge on the truth if you get enough of them.

But that’s not what happened here. We averaged everyone’s guesses together and got answers that were, on average, way off. That’s not inaccuracy; that’s bias, and it showed up on 98% (!) of the questions. In fact, more than half the time, people were wrong about whether an opinion had grown from a minority view to a majority view, or vice versa.
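To see why inaccuracy and bias are different things, here's a small simulation. It's only an illustration under assumed numbers: the true change, the size of the shared bias, and the amount of individual noise are all invented, and the simulated guesses are not clipped to a 0 to 100 range.

```python
import random
from statistics import mean


def crowd_error(guesses: list[float], truth: float) -> float:
    """How far the crowd's average guess lands from the truth."""
    return mean(guesses) - truth


def simulate_guesses(truth: float, bias: float, noise_sd: float,
                     n: int, seed: int) -> list[float]:
    """Each guess = truth + a bias everyone shares + that person's own random error."""
    rng = random.Random(seed)
    return [truth + bias + rng.gauss(0.0, noise_sd) for _ in range(n)]


TRUTH = 10.0  # hypothetical true change, in percentage points

# Noisy but unbiased crowd: individual errors are large, yet they mostly cancel.
noisy = simulate_guesses(TRUTH, bias=0.0, noise_sd=20.0, n=10_000, seed=0)

# Equally noisy crowd that shares a 15-point bias: averaging cannot remove it.
biased = simulate_guesses(TRUTH, bias=15.0, noise_sd=20.0, n=10_000, seed=0)

print(round(crowd_error(noisy, TRUTH), 1))
print(round(crowd_error(biased, TRUTH), 1))
```

The first number should land close to zero and the second close to 15. Adding more people shrinks the noise, but the shared bias stays put.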

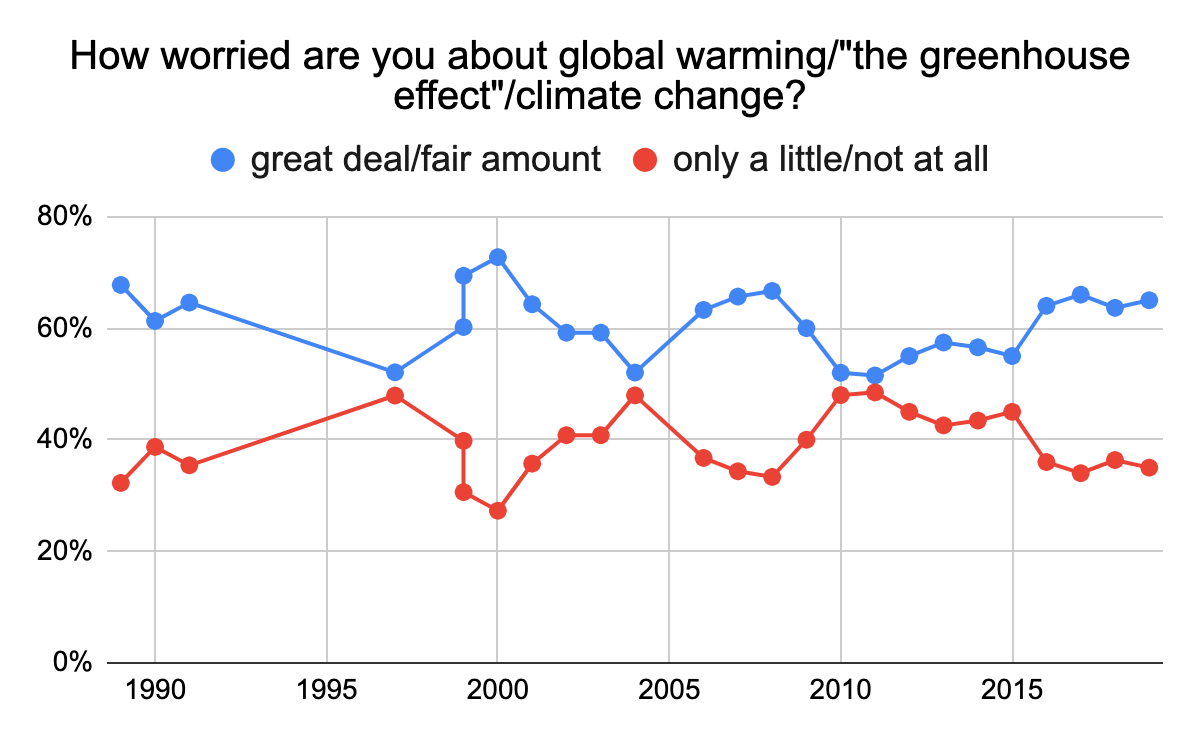

There were three ways people could be biased. They could overestimate how much attitudes had changed, underestimate it, or estimate it in the wrong direction (i.e., people think support for gun control went up, but it actually went down). Of these, overestimation was by far the most common—people thought things had changed a lot more than they really had. This happened on 57% of items.

For example, participants were right that more people say they would vote for a woman for president today than said as much in 1978. But they overestimated that increase, big time:

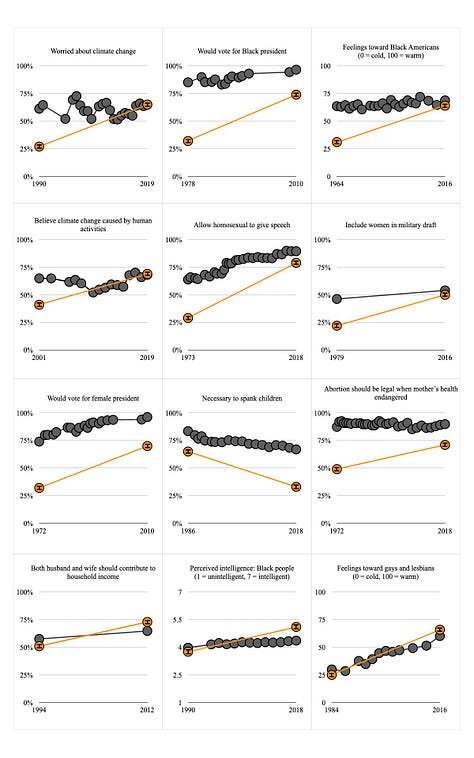

Here are all the items where people overestimated:

On 20% of items, people got the direction of change totally wrong. For example, they thought support for banning handguns had increased since 1987, but it actually decreased. We counted answers as “wrong direction” if people thought there was no change when there actually was, or if they thought there was any change when there actually wasn’t.
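The sorting rules above can be sketched as a single function. One loud assumption: the `tolerance` cutoff for calling a difference "zero" is hypothetical. A real analysis would presumably rely on a statistical test rather than a fixed threshold, so treat this as an illustration of the categories, not the method we used.

```python
def classify_item(actual_change: float, estimated_change: float,
                  tolerance: float = 2.0) -> str:
    """Label one item by comparing estimated change to actual change.

    Both changes are in percentage points. `tolerance` is a hypothetical
    cutoff below which a change (or a difference) is treated as zero.
    """
    actual_flat = abs(actual_change) <= tolerance
    estimated_flat = abs(estimated_change) <= tolerance

    if actual_flat and estimated_flat:
        return "accurate"            # no real change, and people saw none
    if actual_flat or estimated_flat:
        return "wrong direction"     # saw change where there was none, or missed it
    if (actual_change > 0) != (estimated_change > 0):
        return "wrong direction"     # e.g. thought support rose when it fell
    if abs(estimated_change - actual_change) <= tolerance:
        return "accurate"
    if abs(estimated_change) > abs(actual_change):
        return "overestimate"
    return "underestimate"


# Made-up numbers, for illustration only.
print(classify_item(actual_change=20.0, estimated_change=40.0))
print(classify_item(actual_change=-10.0, estimated_change=8.0))
```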

Here are all the items where people estimated in the wrong direction:

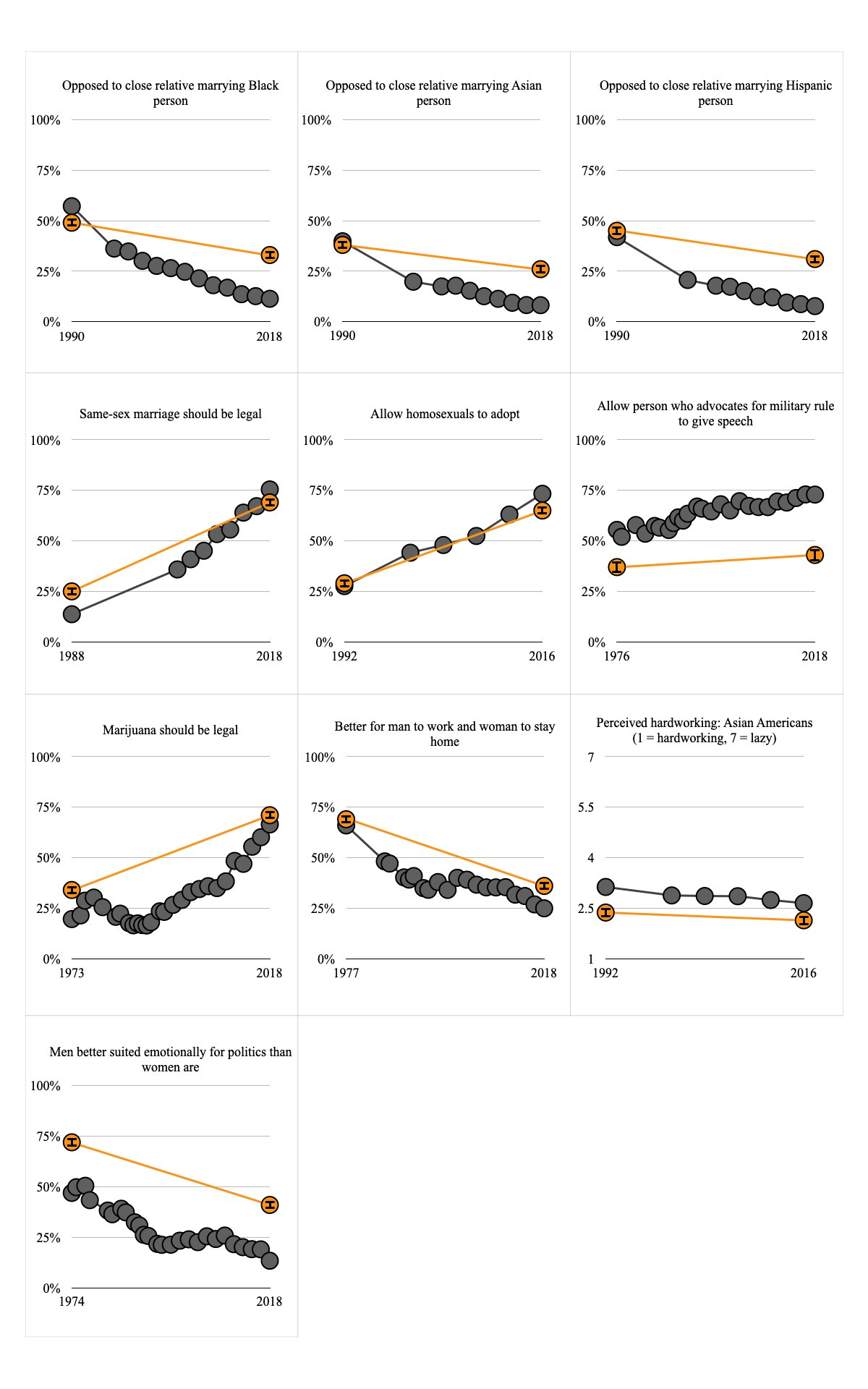

On another 20% of items, people underestimated change. The three big ones here were about interracial marriage. In 1990, 40% to 50% of Americans said they would oppose a close family member marrying someone who was Black, Hispanic, or Asian. (Yikes.) This has since fallen to single digits, but people underestimated that drop.

Here’s every item where people underestimated:

And finally, here are the two items where people got the amount of change right, on average:

WHERE DO THESE BIASES COME FROM?

Here are three totally reasonable hypotheses:

Maybe bias comes from a lack of firsthand experience. If you weren’t alive in the 1980s, for example, most of what you know about that decade probably comes from watching Stranger Things. So maybe people are biased when they have to rely on secondhand information. If that’s true, older people should be less biased, especially over longer periods of time.

Another version of the same idea: people should know more about changes in opinions that affect them directly. For example, women should be better than men at estimating changes in gender attitudes, and racial minorities should be better at estimating changes in racial attitudes.

Maybe bias comes from wishful thinking. If so, people who agree with an opinion should overestimate how much it’s increased, and people who disagree should overestimate how much it’s decreased.

I would have bet on all of these. But all three are wrong.

Older and younger people were equally biased, no matter how far into the past we asked them to go.

People’s race and gender had nothing to do with their skill at estimating changes in racial and gender attitudes, respectively.

People who disagreed with the opinion they were estimating were usually a bit less biased than people who agreed with that opinion. But not by much. For instance, folks who believe in climate change overestimated the increase in that belief, but so did people who don’t believe in it.

This is both surprising and illuminating. It means that when people are estimating changes in these attitudes, they’re all probably drawing on similar information. If the gun nuts and the control freaks were living in totally different worlds, they should have had totally different biases. Instead, they had the same biases.

So where are people’s shared biases coming from? When people don’t have good data, they often rely on stereotypes. And there's one stereotype that seems to be pretty consistent with people’s biases, which is something like: “the world of the past was super conservative, and the world of today is much more liberal.” And that's probably true, just not to the extent that people think it is. If people are relying on a stereotype like that, it would explain why people overestimate the increase in support for abortion, for example, and why they think support for gun control has gone up when it’s actually gone down.

Testing that hypothesis requires us to know how much people think attitudes have shifted specifically in the liberal direction. We got to the bottom of that in Study 2.

STUDY 2: WHY WERE PEOPLE SO BIASED?

Study 2 was just Study 1 with two extra questions. We again showed participants each public opinion item and asked them to estimate how people answered it. Then we asked them what they could conclude about someone’s politics based on their answer to the question. It looked like this:

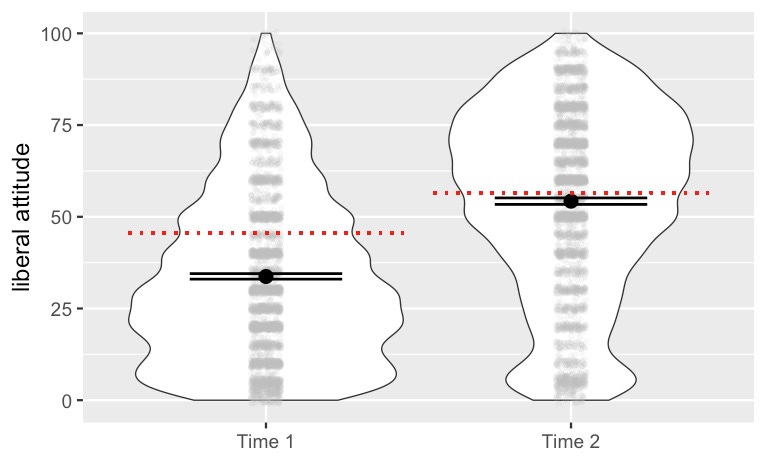

So for each issue, we know a) which side people think is more liberal, and b) how much they think attitudes have shifted toward that side. We figured that people would overestimate change in the liberal direction.

We were right. People overestimated the liberal shift in attitudes, and they overestimated more on items that they thought had more to do with being liberal or conservative. At the same time, they slightly underestimated how liberal attitudes are today. Check it out:

By the way, because we asked Study 1’s questions in Study 2, we could check whether the same biases emerged in both studies. They did. That’s replication, baby. 😎
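The "liberal shift" measure from this study can be sketched roughly like this. It's a simplification under stated assumptions: it codes each item's liberal side as +1 or -1, whereas participants' political ratings were presumably more graded, and the `Item` class, the function names, and every number below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Item:
    """One public opinion question, with changes in percentage points."""
    name: str
    liberal_direction: int    # +1 if agreeing is seen as liberal, -1 if conservative
    actual_change: float      # real change in agreement, earliest to latest
    estimated_change: float   # average change participants guessed


def liberal_shift(change: float, liberal_direction: int) -> float:
    """Re-express a change so that positive always means 'more liberal'."""
    return change * liberal_direction


def liberal_overestimate(item: Item) -> float:
    """Positive when people think attitudes moved further left than they did."""
    estimated = liberal_shift(item.estimated_change, item.liberal_direction)
    actual = liberal_shift(item.actual_change, item.liberal_direction)
    return estimated - actual


# Hypothetical items with made-up numbers, for illustration only.
items = [
    Item("supports policy A", liberal_direction=+1,
         actual_change=-10.0, estimated_change=8.0),
    Item("supports policy B", liberal_direction=-1,
         actual_change=-5.0, estimated_change=-20.0),
]
overestimates = [liberal_overestimate(item) for item in items]
```

Flipping the sign for items where agreement is the conservative answer puts every question on the same scale, so a single positive number always means "people think we moved further left than we did."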

EXCEPTIONS

“People overestimate the liberal shift in attitudes” fits many items, but not all of them. For example, people thought immigration attitudes had gotten more conservative, and they were wrong. What’s going on there?

Maybe people override their stereotypes when they have some data to go on. For instance, when the “build the wall” dude gets elected president, it seems pretty reasonable to conclude that people feel less positive toward immigrants than they used to. It just turns out that these big, watershed events don’t actually provide a peek into what people really believe.

In fact, other researchers have some data on this. Shortly before the Supreme Court legalized same-sex marriage in 2015, Margaret Tankard and Elizabeth Levy Paluck asked Americans how much they supported same-sex marriage, and how much they thought other people supported it. After the decision came down, Tankard and Paluck asked the same questions again. People’s support for same-sex marriage didn’t change, but they thought that other people now supported it more. Even though the Supreme Court is famously unelected and unaccountable, people seemed to assume that Obergefell v. Hodges said something about the will of the people.

The idea that the state of the world reflects the preferences of the people in it is both a) totally reasonable and b) often wrong. For instance, you might assume that most Americans think it should be illegal to smoke weed for fun, because it’s illegal at the federal level and in most states. In fact, a majority of Americans have supported legalization for 10 years. So why isn’t weed legal across the country? Maybe it’s because Boomers run the government, and older folks are more likely to say weed is bad. Maybe politicians don’t make it a priority because the places that want legalization the most already have it. Maybe people don’t realize how popular it’s become. Whatever the reason, you’d be wrong to look around at the weird hodgepodge of weed laws and assume this is what most people want. In fact, you’d be wrong to look around at the weird hodgepodge of the world itself and assume this is what most people want.

STUDIES 3A-3C: THE TRUTH SHALL SET YOU FREE, HYPOTHETICALLY

Okay, so people don’t know how opinions have changed in America. What would happen if people knew the truth?

You might think this is just a matter of correcting people’s misperceptions by showing them the data. We tried that in a bunch of different pilot studies and it didn’t do anything, mainly because people didn’t believe us. That’s not surprising. People on these online study platforms get lied to all the time. If you’re pretty sure that people are more in favor of gun control than they used to be, and I show you a graph that says the opposite, are you going to believe me? Or are you going to assume that I’m lying to you, or that pollsters did a bad job, or that people aren’t answering the questions truthfully?

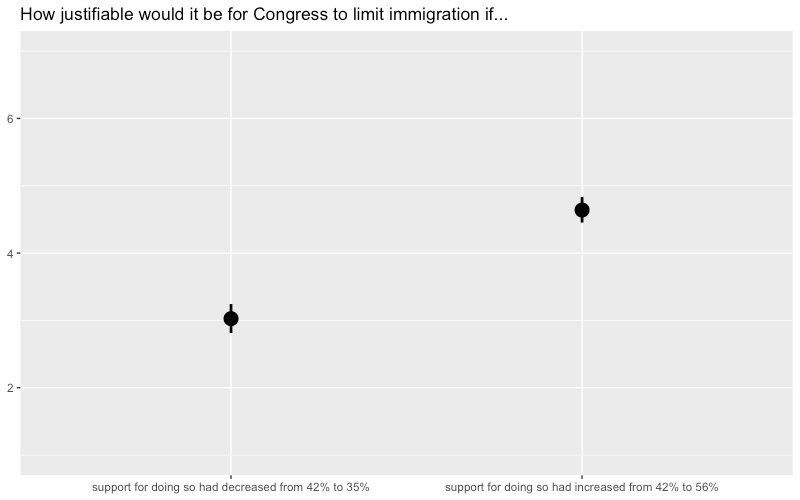

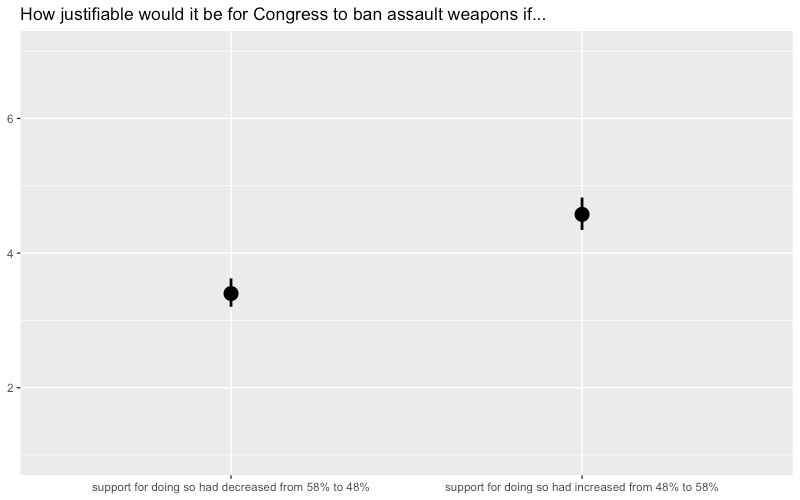

So we tried something else. Instead of showing people the right answers, we asked them to merely consider the right answers. Like, “hey wouldn’t it be crazy if opinions had changed like this? I mean lol I know crazy right but like what if they did?” Then we asked them how justifiable it would be for Congress to pass some law related to that opinion. It looked like this:

On every issue, people were happy to tell us that it’s justifiable for Congress to go along with public opinion. In Study 3a, people said that it’s far more justifiable for Congress to limit immigration if support for doing so had risen from 42% to 56% (which is roughly what people in Study 1 thought had happened) than if it had fallen from 42% to 35% (which is what actually happened):

In Study 3b, people said it’s far more justifiable for Congress to ban assault weapons if support for doing so had increased from 48% to 58% (which is roughly what people in Study 1 thought had happened), than if it had decreased from 58% to 48% (which is what actually happened).

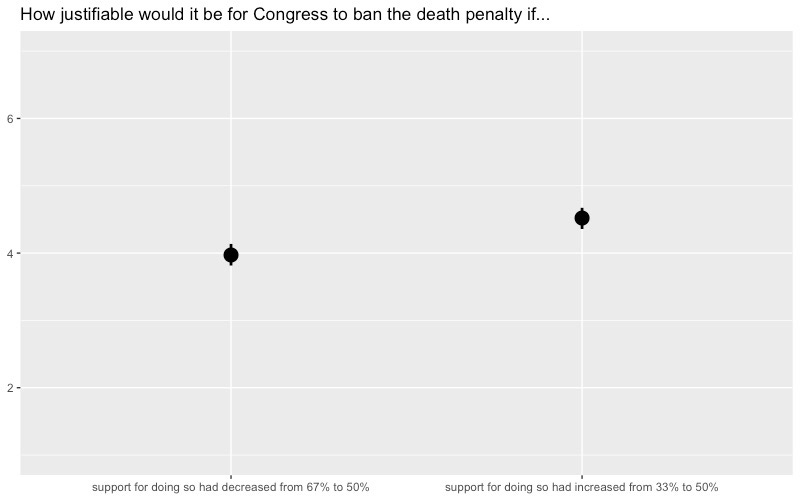

And in Study 3c, people thought it was more justifiable for Congress to ban the death penalty when support for doing so had risen from 33% to 50% (roughly what people in Study 1 thought had happened) than when it had fallen from 67% to 50% (the opposite of what participants thought had happened). This was an important comparison because opinions were split in both cases—the only difference was whether support was rising or falling. (The actual change was from 64% to 57%.)

This pattern held regardless of whether people supported or opposed each position. Which is neat! People were willing to say “look, I’m not for it, but if that’s what everybody else wants, I guess it’s fine.” This seems like an important attitude to have around in a democracy.

So if people understood how attitudes have really changed, would they support different policies, and would Congress pass different laws? Hey, maybe! I’m sure plenty of people wouldn’t change their minds no matter what, and some things are right or wrong no matter how many people favor them. (I, personally, don’t think the death penalty is justifiable even when it’s popular.) But maybe there’s a bit of hope peeking through here, that people are willing to entertain, even hypothetically, that the government should reflect the will of the people. Perhaps things would be pretty different if people knew what that will was, and how it has changed.

CONCLUSION/DISCUSSION/THE SLEDGEHAMMER AND THE VASE

We make sense of the world by telling stories about it. “We used to have racism, then we did a Civil Rights Movement; all good now.” “We used to have sexism, then we did a feminist movement; all good now.” “We used to believe in God, then we did an atheist revolution; all good/bad now, depending on your feelings about God.”

These stories are often wrong. Sometimes they simply didn’t happen; sometimes they kind of happened. They’re almost always oversimplifications and caricatures, which is usually fine, but it’s less fine when we’re deciding whether people should be able to own an AR-15.

Where do these stories come from? Probably from all over the place: half-remembered lessons from ninth grade history, a story your grandpa told you once, episodes of Mad Men. All those scraps get pasted together in our heads, and we round off the edges to make everything fit together neatly. The result is this strange conviction that we see the past through a telescope, when we’re actually looking into a kaleidoscope, hallucinating entire scenes out of a few refracted images.

When you get these stories wrong, you'll misunderstand how the world works and how to change it. If you think that we’ll wise up about climate change once we’ve lived through enough droughts and hurricanes, or that we’ll wise up about gun control once we’ve lived through enough mass shootings—well, you’re gonna be pretty disappointed once you see the data. Activists have fought for decades to convince people that abortion is acceptable; their efforts barely moved the needle at all. Apparently the only thing that actually makes people support abortion is the Supreme Court taking it away.

(That shouldn't be surprising, because the same thing happened with the death penalty. Support for executing murderers spiked after the Supreme Court briefly banned the death penalty in 1972. They reinstated it in 1976, but public opinion still hasn’t fallen to where it was before.)

If you don’t want these biases in your head, what should you do? You could spend lots of time scrolling through Gallup polls, I suppose. It would certainly be more informative than checking your stocks or reading the news.

But I think the better solution is to crank down your convictions. It’s easy to feel like you know what things were like back then and what they’re like now, but it’s hard to actually know. You can’t get a good sense of the past or the present by skimming the headlines, just like you can’t get a good sense of America by spending a 4-hour layover at the Reno airport. You have to rent a car and drive around, talk to people, eat a burrito, fall in love, watch some bad standup, host Thanksgiving, go camping, get your heart broken, take the Staten Island Ferry past the Statue of Liberty, play intramural basketball, and move apartments four times in three years—and even then all you really know is that you don’t know much.

And that’s just what it takes to know the present. Knowing the past is much harder. History is an endless display of priceless vases and time is a dude with a sledgehammer smashing each one in turn: once a moment is gone, it’s gone for good, and all we can do is try to piece together what it used to look like. Maybe this piece went with that one? Maybe it’s a picture of a—is that a lion, or is that a person? Meanwhile, the pile of shards grows taller.

These studies show that, when we try to glue the vases back together, we don’t just mix up some pieces randomly. We consistently put some of them in the wrong place. One of the big mistakes we make is failing to realize how liberal attitudes already were in the past, and how little they’ve changed since then. Americans were already split on abortion in the 1970s, they’ve long thought that people with unpopular opinions should have the right to free speech, and most of them have believed for decades that humans are causing climate change. A majority of them also thought interracial marriage was bad as recently as 1990. The past is complicated, man.

So next time you’ve got an inkling that things ain’t like they used to be, consider the possibility that you might be totally wrong. Man, people used to be so much better at this!

[1] People often have concerns about running psychology studies on the internet, and for good reason: you’ll get junk responses if you’re not careful. I do eight things to prevent that.

I use a platform called CloudResearch that prescreens participants with basic quality control checks.

I only let people take my studies if they pass a three-item test of English language and American culture (when I’m studying Americans). For instance: “Which of these is NOT associated with Halloween?” and you have to pick “eating turkey.”

I ask people for their birth year at the beginning of the study and I ask them for their age at the end.

I include various manipulation checks and attention checks in the survey. For instance, I sometimes ask people to recall the last answer that they gave.

I always embed an attention check at the end of the survey.

My studies are always either a) very short, or b) at least mildly interesting.

I pay 50-100% higher than average.

I keep a list of past offenders that I’ve kicked out of surveys for one reason or another, and I don’t let them sign up again.

The people who fail the checks tend to respond randomly and fill out open-ended text boxes with gobbledygook. The people who pass the checks generally give answers that make sense. There are always a few trolls I don’t catch, but otherwise I feel pretty good about the quality of data that I get. If you ever see someone presenting data they collected online without tons of quality assurance, you should assume that their data includes a bunch of junk responses.
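As one example of how these checks work, asking for birth year at the start and age at the end presumably lets you flag people whose two answers can't both be true. Here's a minimal sketch of that consistency check; the function name and the `survey_year` parameter are hypothetical.

```python
def age_matches_birth_year(birth_year: int, reported_age: int,
                           survey_year: int) -> bool:
    """True when the reported age is possible given the reported birth year.

    Someone born in `birth_year` is either (survey_year - birth_year) years
    old or one year younger, depending on whether their birthday has passed.
    """
    expected = survey_year - birth_year
    return reported_age in (expected - 1, expected)


# Made-up responses: the first is consistent, the second is not.
print(age_matches_birth_year(birth_year=1990, reported_age=32, survey_year=2022))
print(age_matches_birth_year(birth_year=1990, reported_age=45, survey_year=2022))
```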

One thing that seems relevant is that a certain segment of the population believes that history has a 'preferred direction' or purpose ... there is a natural law of history that means we are all progressing, as we are supposed to, to the utopian future. If this teleological lens is how you see history, then people in the past by definition must have had less enlightened attitudes than we do in the present. The reverse of this, popular in other places, is that rather than 'progressing' we have embarked on a path of terminal decline. People who believe this believe that those in the past must have had more enlightened attitudes than we do now. Sometimes the people on both sides believe exactly the same things about attitudes in the past -- they only disagree over what constitutes progress vs decline.

The teleological view is rather dangerous, from a governing point of view. It means that people are not free to propose a way to deal with a problem, try it, discover that it didn't work as imagined, conclude it was a bad idea and decide to not do this any more. Once you get started with a project, there is relentless pressure to double down on the measure when it first shows signs of not working out after all. Progress appears to not have a reverse gear. But can explicitly 'progress-neutral' or 'progress-irrelevant' measures grow sufficient popular backing?

This critique is based purely on my squinting at the graphs, not on any number crunching, but I was less impressed by the under/over estimate charts than the strength of the conclusions here seems to imply. Many of them I look at and think that people got it basically right, or that the delta was pretty close but the absolute was off.

I’m saying that the standard for being right/unbiased is too exacting, and therefore saying “98% (!) of the questions” is a bit misleading. I would be very surprised if they all matched as closely as the ones that did match, and suspect those matches are more chance.

This makes me think the stronger claims of bias are exaggerated -- maybe we don’t need this much of an explanation! But the parts where we dig in to the ones where people are very, very wrong are quite interesting.