Both Democrats and Republicans can pass the Ideological Turing Test

N = 1,648

This is joint work with Jason Dana and Kris Nichols. You can download a PDF version of this paper on PsyArXiv.

I dunno if you’ve heard, but Democrats and Republicans do not like each other. 83% of Democrats have an unfavorable view of Republicans, and Republicans return the lack of favor in similar numbers. Republicans think Democrats are immoral, Democrats think Republicans are dishonest, and a majority of both parties describes the other party as “brainwashed,” “hateful,” and “racist.” These numbers have only grown in recent decades.

(One particularly evocative statistic: only an estimated 10% of marriages cross party lines.)

But here’s something funny: according to a bunch of recent research, Democrats and Republicans don’t seem to know who they’re hating. For example, Democrats underestimate the number of Republicans who think that sexism exists and that immigration can be good. In return, Republicans overestimate how many Democrats think that the US should have open borders and adopt socialism. Both parties think they’re more polarized than they actually are. And a majority of both sides basically say, “I love democracy, I think it’s great,” and then they also say, “The other party does NOT love democracy, they think it’s bad.”

Maybe these parties hate each other because they misperceive each other? While Democrats and Republicans dislike and dehumanize each other, each side actually overestimates the other side’s hate, and those exaggerated meta-perceptions (“what I think that you think about me”) predict how much they want to do nasty undemocratic things, like gerrymander congressional districts in their party’s favor or shut down the other side’s favorite news channel. When you show people what their political opponents are really like, they see the other side as “less obstructionist,” they like the other side more, and they report being “more hopeful.”

It would be a heartwarming story if it turned out all of our political differences were one big misunderstanding. That story is, no doubt, at least a little true.

But there are two things that stick in my craw about all these misperception studies. First, we know that participants sometimes respond expressively—that is, when Democrats in psychology studies say things like, “Yes, I believe the average Republican would drone-strike a bus full of puppies if they had the chance,” what they really mean is “I don’t like Republicans.” It’s hard to separate legit misperceptions from people airing their grievances.

Second, it’s not clear whether we’ve given people a fair test of how well they “perceive” the other side. So far, researchers have just kinda picked some questions they thought would be interesting, and “interesting” probably means—consciously or subconsciously—“questions where we’re likely to find some big honkin’ misperceptions.” Someone with the opposite bias could almost certainly write just as many papers about accurate cross-party perceptions. There are infinite questions we can ask and there’s no way of randomly sampling from them.

To start untangling this mess, maybe we need to leave the lab and go visit Colorado Springs in 1978.

THE IDEOLOGICAL TURING TEST

That was where a Black police officer named Ron Stallworth posed as an aspiring White supremacist, befriended some Ku Klux Klan members over the phone, and convinced them to let him join their club. (His White partner played the part in person.) At one point, Stallworth got David Duke, the Grand Wizard himself, to expedite his application. By the end of Stallworth’s investigation, the local chapter of the KKK was trying to put him in charge.

(If that story sounds familiar, it’s because it was made into the 2018 Oscar-winning movie BlacKkKlansman.)

Stallworth passed a pretty high-stakes test of his knowledge of Klan psychology, which the economist Bryan Caplan calls the “Ideological Turing Test”—if I can pretend to be on your side, and you can’t tell I’m pretending, then I probably understand you pretty well. In the original Turing Test, people try to tell the difference between a human and a computer. In the Ideological Turing Test, people try to tell the difference between friend and foe.

We thought this would be a useful way of investigating misperceptions between Republicans and Democrats. We first challenged each side to pretend to be the other side, and then we had both sides try to distinguish between the truth-tellers and the fakers. If partisans have no idea who the other side is or what they believe, it should be hard for people to do a convincing impression of the opposite party. So let’s see!

You can access all the materials, data, and code here.[1]

PART I: WRITERS

We got 902 participants on Amazon Mechanical Turk, roughly split between Democrats and Republicans. (Sorry, Independents: to take the study, you had to identify with one side or the other.)

We asked participants to write a statement of at least 100 words based on one of two prompts, either “I’m a REPUBLICAN because…” or “I’m a DEMOCRAT because…”. Let’s call these folks Writers. The prompts were randomly assigned, so half of Writers were told to tell the truth and half were told to lie.

Writers knew that other participants—let’s call them Readers—would later read their statements and guess whether each Writer was telling the truth or lying. We offered Writers a bonus if they could convince a majority of readers that their statement was true.

(This was pre-ChatGPT, when it wasn’t so easy to whip up some human-sounding text on demand.)

We tossed out a few statements that were either a) totally unintelligible, b) obviously copy/pasted from elsewhere on the internet, or c) responding to the wrong prompt. But otherwise, we kept them all in.[2]

PART II: READERS

We got another group of 746 Democrats and Republicans, and we explained the first half of the study to them. Then we showed them 16 statements from Part I, which were a mix of real/fake and Democrat/Republican. We asked them to guess whether each one was REAL or FAKE, and we paid them a bonus for getting more right, up to $4.

TIME OUT: DO YOU WANNA TRY THIS FOR YOURSELF?

Before you see the results, you can take the Ideological Turing Test for a spin! A software engineer named vanntile generously volunteered to turn this study into a slick web app: ituringtest.com. You’ll see 10 randomly selected statements and judge whether each one is real or fake; it takes about three minutes.

(Huge thanks to vanntile for building this, like an angel that came down from Computer Heaven. If you have any interesting projects in software engineering or cybersecurity in Europe, check him out.)

RESULTS

First, let’s look at the most important trials: Democrats reading real/fake Democrat statements, and Republicans reading real/fake Republican statements. Could people tell the difference between an ally and a pretender?

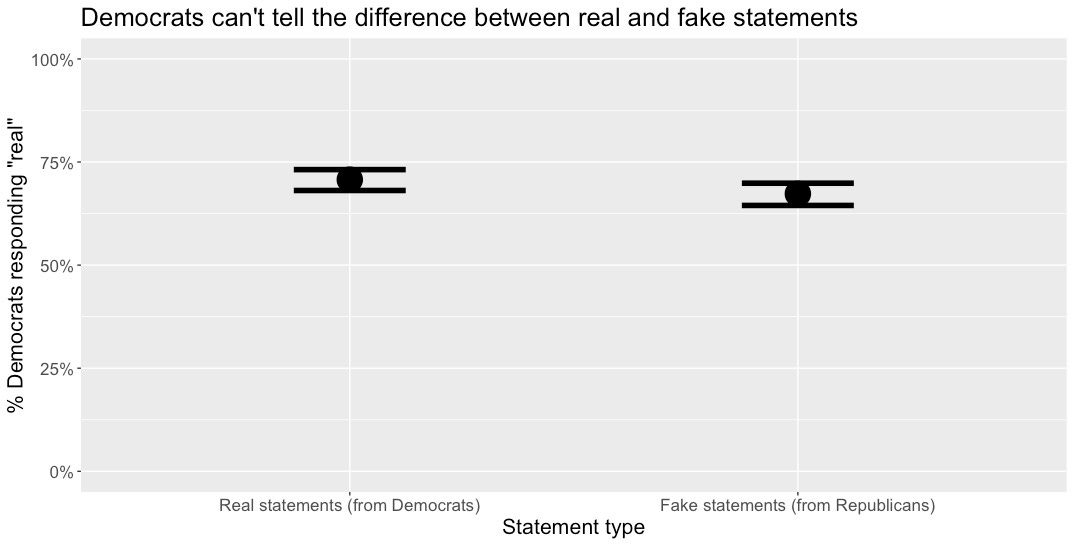

For Democrats, the answer is no:

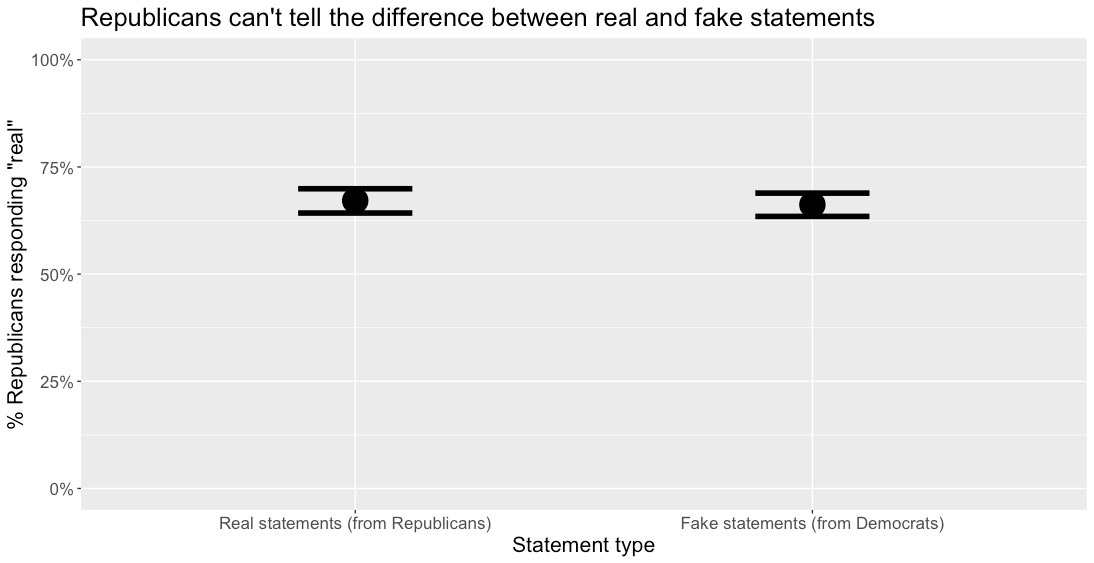

For Republicans, the answer is also no:

Fake Democrats and fake Republicans were as convincing as real Democrats and real Republicans.

That means Writers did a good job! When Democrats were pretending to be Republicans, they could have written stuff like, “I’m a Republican because I believe every toddler should have an Uzi.” And when Republicans were pretending to be Democrats, they could have written stuff like, “I’m a Democrat because I’m a witch and I want to cast a spell that turns everyone gay.” They didn’t do that. They wrote statements that looked as legit as statements from people talking about their actual beliefs. So: both Democrats and Republicans successfully passed the Ideological Turing Test.

That’s already surprising, but it gets weirder.

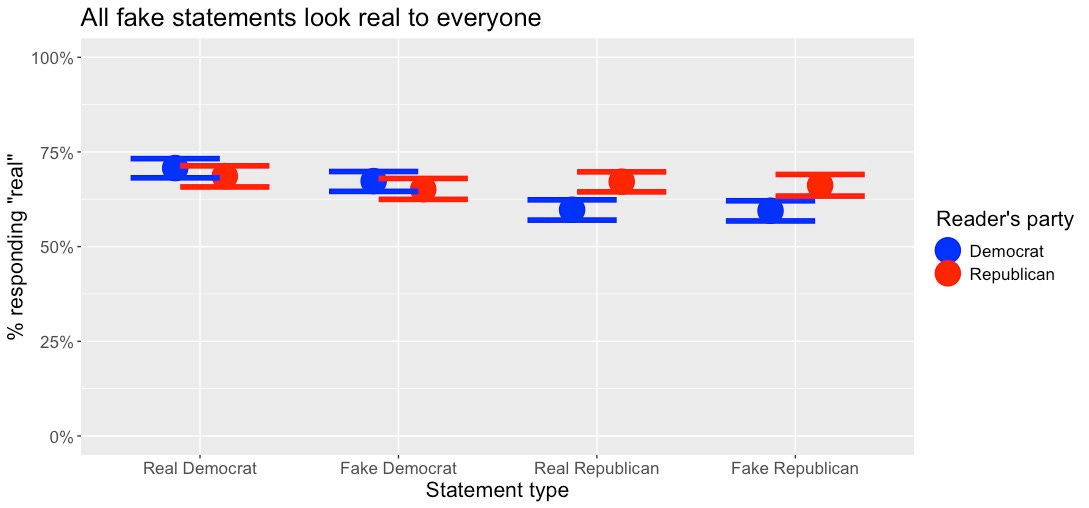

Every participant, regardless of their own party, saw a random mix of all four kinds of statements (real/fake and Democrat/Republican). Here’s a no-brainer: Republicans should be better at picking out real/fake Republicans than they are at picking out real/fake Democrats, right? And Democrats should be better at picking out real/fake Democrats than they are at picking out real/fake Republicans. After all, you should know more about your own side.

Except…that didn’t happen. This next graph gets a little more complicated, so I’ll preface it with the three things that jump out at me:

Neither side did a good job discriminating between real and fake, no matter which party the statement claimed to come from.

Republicans said “REAL” at pretty much the same rate to all four kinds of statements.

Democrats were more likely to flag all Republican statements as fake, whether those statements were actually fake or not.

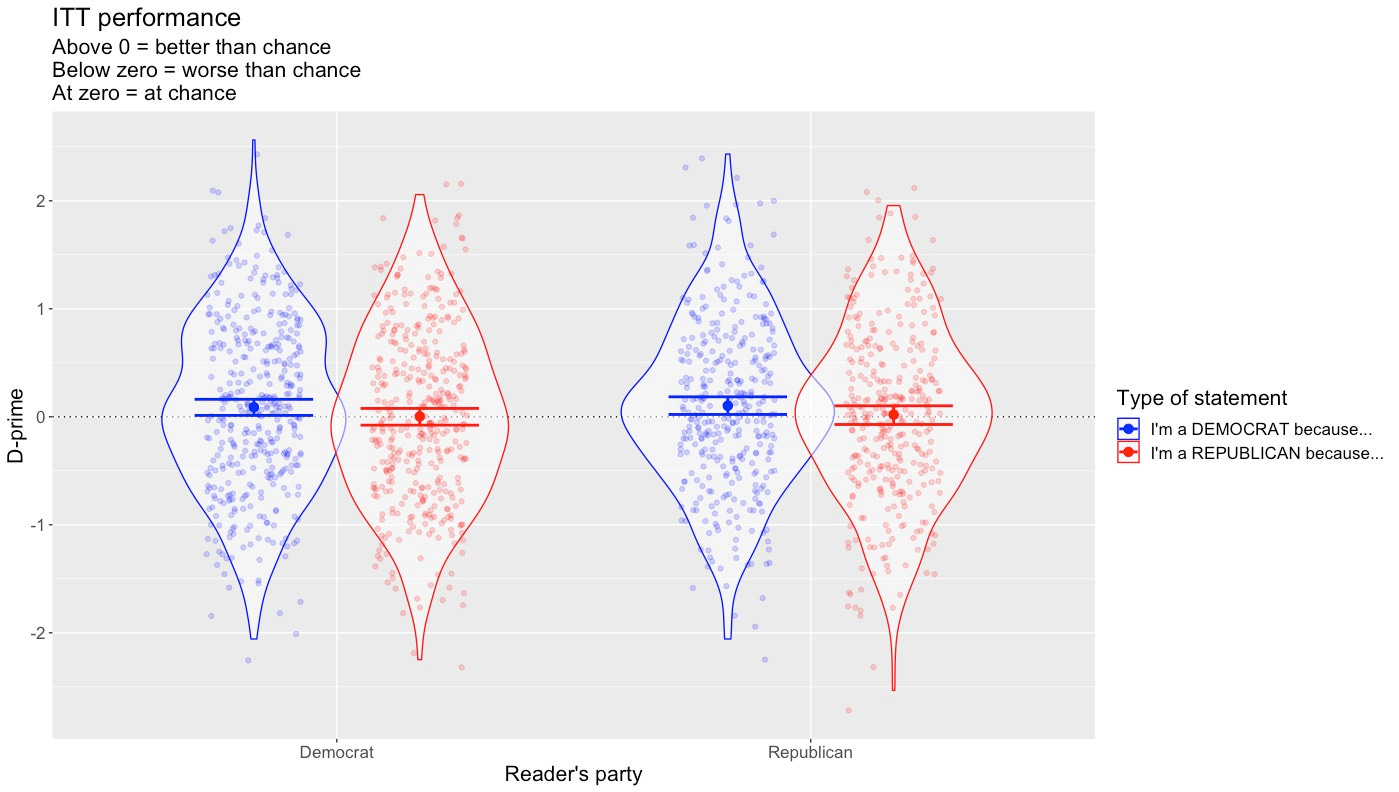

RESULTS, BUT FOR NERDS

To zoom in on how well Readers did, we can use a measure from signal detection theory called d-prime. All you need to know about d-prime is that zero means you’re at chance (you could have done just as well by flipping a coin), above zero means you’re better than chance, and below zero means you’re worse than chance.
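For anyone who wants the formula behind that description: in the standard equal-variance signal detection model, d-prime is the z-scored hit rate minus the z-scored false-alarm rate. Here’s a minimal Python sketch. Treating “FAKE” as the signal response (a hit is calling a fake statement FAKE, a false alarm is calling a real statement FAKE) is our assumption for illustration, and the three Readers are made up.

```python
from statistics import NormalDist


def d_prime(hit_rate: float, false_alarm_rate: float) -> float:
    """Sensitivity d' = z(H) - z(F), with z the inverse of the standard normal CDF.

    Assumed convention: a "hit" is calling a fake statement FAKE, and a
    "false alarm" is calling a real statement FAKE. Rates must be strictly
    between 0 and 1, or inv_cdf raises an error.
    """
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(false_alarm_rate)


# Made-up Readers, given as (hit rate, false-alarm rate):
print(round(d_prime(0.60, 0.60), 2))  # 0.0   flags fakes and reals equally often: chance
print(round(d_prime(0.80, 0.20), 2))  # 1.68  flags fakes far more often: better than chance
print(round(d_prime(0.30, 0.70), 2))  # -1.05 flags reals more often: worse than chance
```

The key point is that d-prime depends on the *gap* between how often a Reader says FAKE to fakes and to reals, not on how often they say FAKE overall.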

Readers from both parties performed basically at chance, regardless of the kind of statements they were reading:

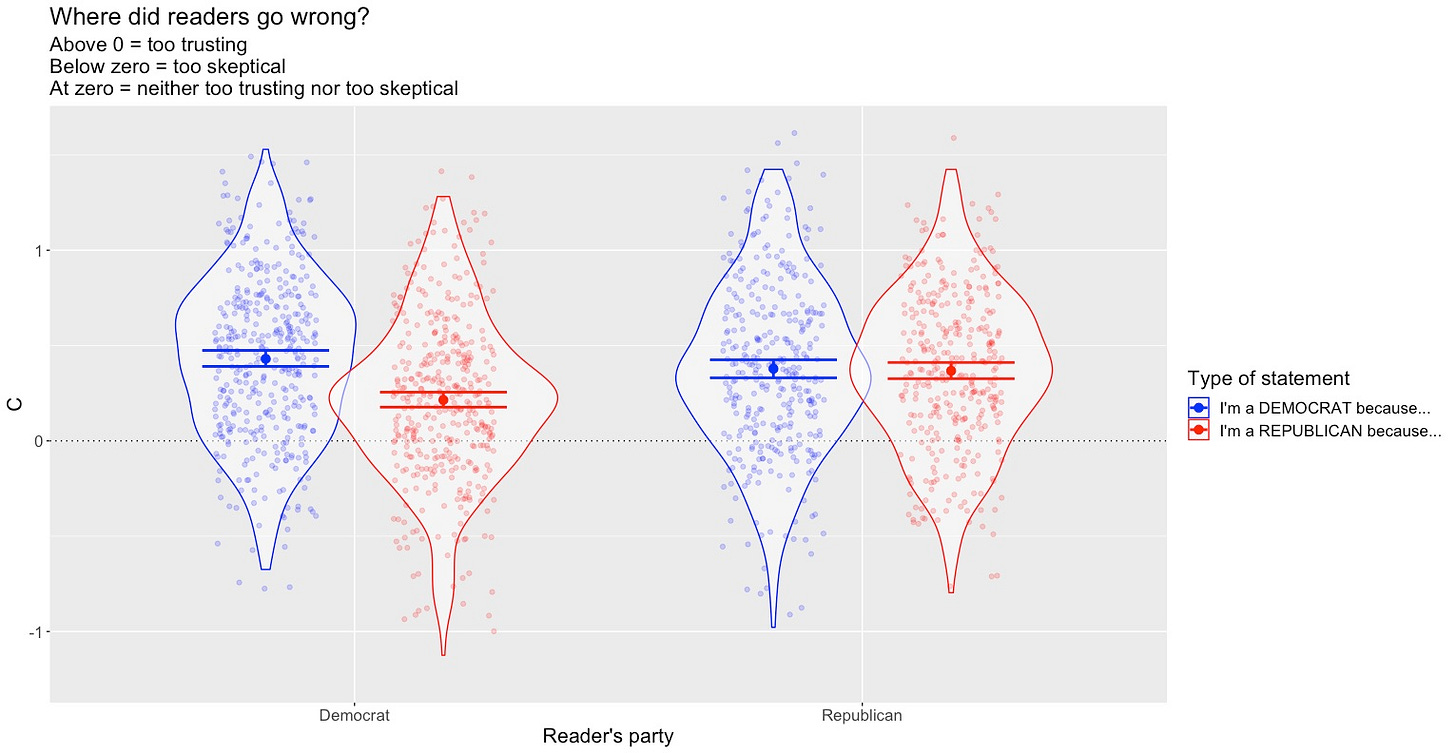

There are a couple of ways to end up performing at chance. You could say “REAL!” to every statement, or you could say “FAKE!” to every statement, or you could respond randomly. We want to know which one Readers were doing, and signal detection theory has another measure that can help with that: “c”. On the “c” scale, scores above zero mean participants said “REAL!” too often, and scores below zero mean participants said “FAKE!” too often.
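Both measures can be computed from a Reader’s four response counts. This sketch uses the usual definition c = -(z(H) + z(F)) / 2 and, as an assumption, treats FAKE as the signal response, which makes positive c mean “said REAL too often,” the same sign convention described above. It also applies a log-linear correction (add 0.5 to each count) so that all-REAL or all-FAKE responders don’t produce infinite z-scores; the paper may have handled that differently. The three Readers are hypothetical, each judging 8 fake and 8 real statements.

```python
from statistics import NormalDist


def sdt_from_counts(hits, misses, false_alarms, correct_rejections):
    """Return (d_prime, c), treating a FAKE response as the 'signal' response.

    hits: fake statements called FAKE        misses: fake statements called REAL
    false_alarms: real statements called FAKE  correct_rejections: real called REAL
    A log-linear correction (+0.5 per cell) keeps rates away from 0 and 1,
    where the z-transform would be infinite.
    """
    z = NormalDist().inv_cdf
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    d_prime = z(hit_rate) - z(fa_rate)
    # c > 0: too few FAKE responses (said REAL too often); c < 0: said FAKE too often.
    # Adding 0.0 turns a -0.0 result into 0.0 so it prints tidily.
    c = -0.5 * (z(hit_rate) + z(fa_rate)) + 0.0
    return d_prime, c


# Three hypothetical Readers, each judging 8 fake and 8 real statements.
readers = {
    "always REAL": sdt_from_counts(hits=0, misses=8, false_alarms=0, correct_rejections=8),
    "always FAKE": sdt_from_counts(hits=8, misses=0, false_alarms=8, correct_rejections=0),
    "coin flip": sdt_from_counts(hits=4, misses=4, false_alarms=4, correct_rejections=4),
}
for name, (d, c) in readers.items():
    print(f"{name:12s} d' = {d:+.2f}   c = {c:+.2f}")
```

All three Readers land at d-prime = 0, so accuracy alone can’t tell their strategies apart; only c does, coming out positive for the always-REAL Reader, negative for the always-FAKE Reader, and zero for the coin flipper.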

Participants ended up performing at chance in large part because they said “REAL!” too often:

One weird thing here: Democrats trust Democrat-claiming statements more than they trust Republican-claiming statements. But Republicans trust both kinds of statements equally. I’m not sure what to make of that, especially because Democrats still trust Republican-claiming statements more than they should.

CONFIDENCE

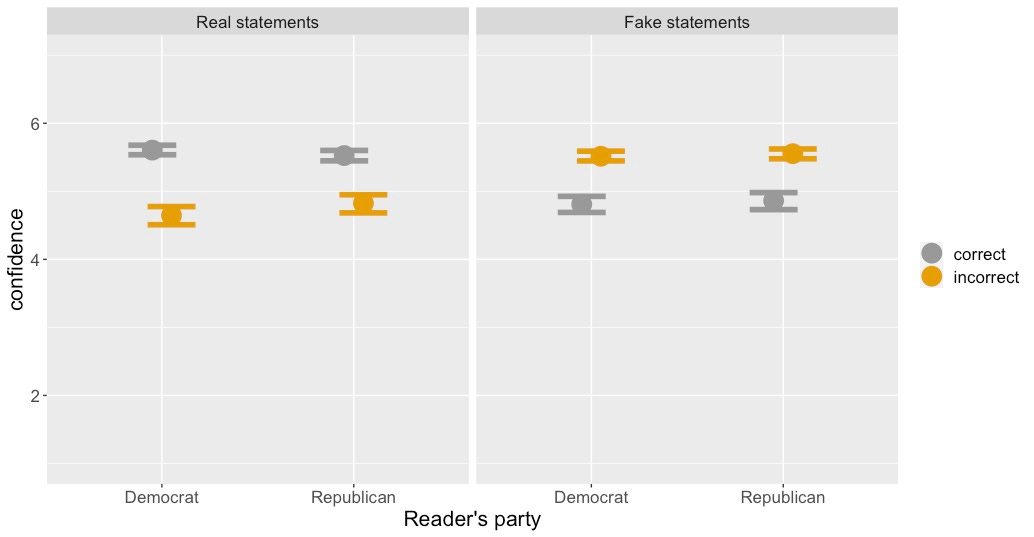

We asked Readers how confident they were about each of their guesses. Overall, confidence was not related to accuracy.

On the graph below, I’m only including the critical trials—Democrats reading statements that claim to be from Democrats, and Republicans reading statements that claim to be from Republicans. This graph is pretty confusing until you understand the pattern: people felt more confident when they thought a statement was real. So people had high confidence on real statements that they got right and on fake statements they got wrong.

BUT HOW DEMOCRAT/REPUBLICAN DO THE STATEMENTS LOOK?

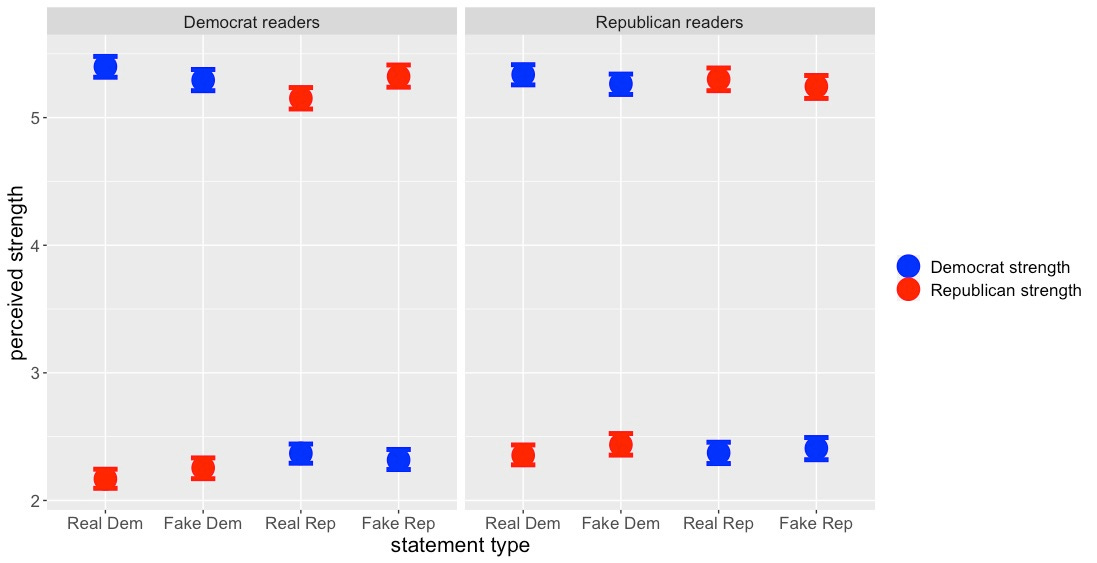

After Readers guessed whether each statement was real or fake, we also asked them, “How strongly do you think the writer of this statement identifies with the Democratic party?” and “How strongly do you think the writer of this statement identifies with the Republican party?” You could think of this as a more sensitive measure than a simple stab at real/fake. For instance, if you think this is a real Republican statement, just how Republican is the person who wrote it?

Using these ratings, we can see that fake statements seem just as partisan as real statements. For instance, Readers thought fake Republicans and real Republicans sounded equally Republican:

This suggests our fake Writers were doing something pretty similar to what the real Writers were doing. Fakers could have easily phoned in their statements, maybe because it was difficult for them even to type words that they didn’t believe. For instance, Republicans pretending to be Democrats could have said something like, “I’m a Democrat, but I’m a moderate one! Almost a Republican, really...”. Or they could have gone overboard: “I’m the rootin’est tootin’est Democrat you ever did see!” On average, they didn’t do either of those things. They wrote statements that sounded just as Democrat as statements from real Democrats.

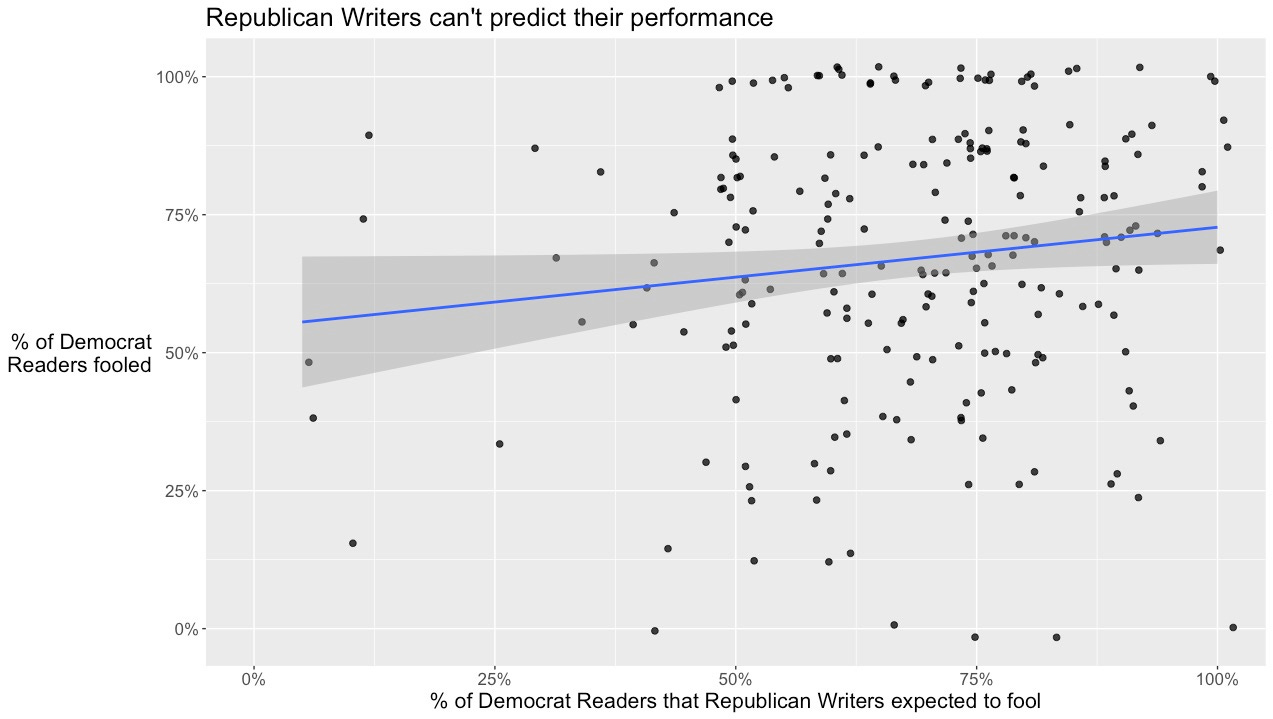

COULD WRITERS PREDICT THEIR (LACK OF) SUCCESS?

In Part I, we asked Writers to predict how well their statement would do (that is, the percentage of Readers who would judge their statement as “REAL!”). On average, Writers guessed correctly. But individual Writers were way off: there was no correlation between their predictions and their performance. So although Writers didn’t over- or under-estimate their performance on average, they had no idea how well their own statement was going to do. They were just wrong.

Here’s the graph for Democrat Writers predicting how well they’ll fool Republican Readers:

And Republican Writers predicting how well they’ll fool Democrat Readers:

(I know that line looks like it’s significantly sloped; it’s actually p = .05).

WHAT KINDS OF PEOPLE ARE BETTER AT WRITING? WHAT KINDS OF PEOPLE ARE BETTER AT READING?

So far, I’ve been showing you lots of averages. But of course, some Writers wrote statements that sounded way more convincing than others, and some Readers were way better at picking out the real statements from the fake ones. We tried to figure out what made these Writers and Readers better or worse at their jobs, but we couldn’t find much.

Here’s a reasonable hypothesis: the more you identify with one party, the harder it is to pretend to be the other party. Die-hard Democrats probably think all Republicans are nutjobs; die-hard Republicans probably think all Democrats are wackos. In both cases, extremists should be worse at faking, and worse at identifying the fakes.

This reasonable hypothesis is wrong. We asked participants how strongly they identified with each party, and it didn’t affect how well they did as Writers or as Readers, regardless of what they were writing or reading. Across the board, the nutjobs and the wackos were just as good as the mild-mannered centrists.

We also asked people about their age, race, gender, and education. And we tried to figure out the political makeup of their social environment. For instance, maybe Democrats who live in red states or have a lot of Republican friends or family would do better than Democrats who live in blue states and only ever talk to other Democrats. But none of these demographics ever changed Writing or Reading performance by more than 5 percentage points, and in most cases they didn’t matter at all.

CAN COMPUTERS DO WHAT HUMANS CAN’T?

At this point, we started wondering whether it was even possible to tell the difference between real and fake statements. Maybe Writers were so good that they left no detectable trace. That would be pretty impressive, though it might also mean our task was too easy.

To find out, we did a bunch of fancy computer stuff. Well, specifically, my friend Kris Nichols did a bunch of fancy computer stuff.

Surprisingly, a lot of the fancy computer stuff didn’t outperform humans. Random forest models? No better than chance. Latent Dirichlet Allocation? Bupkis. The only thing that worked was a souped-up lasso regression, which got the right answer about 70% of the time, much better than the 50% humans got. This means there was something different about real and fake statements; humans just couldn’t pick it out.
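We don’t know exactly what went into Kris’s “souped-up” model, so what follows is only a generic sketch of the underlying technique: logistic regression with an L1 (lasso) penalty on bag-of-words counts, fit by proximal gradient descent in plain Python. The four statements are made up, not taken from the study. For brevity the model is checked on its own training data; a real analysis would score held-out statements (for example, with cross-validation) and would more likely use an established library.

```python
import math
from collections import Counter


def featurize(texts):
    """Bag-of-words count vectors over a shared, lowercased vocabulary."""
    vocab = sorted({w for t in texts for w in t.lower().split()})
    index = {w: i for i, w in enumerate(vocab)}
    rows = []
    for t in texts:
        row = [0.0] * len(vocab)
        for w, n in Counter(t.lower().split()).items():
            row[index[w]] = float(n)
        rows.append(row)
    return rows, vocab


def soft_threshold(x, t):
    """Proximal step for the L1 penalty: shrink toward 0, snapping small values to exactly 0."""
    if x > t:
        return x - t
    if x < -t:
        return x + t
    return 0.0


def fit_l1_logistic(X, y, lam=0.05, lr=0.2, steps=2000):
    """Lasso-penalized logistic regression fit by proximal gradient descent (ISTA).

    Minimizes mean log-loss + lam * sum(|w_j|); the intercept b is not penalized.
    """
    n, p = len(X), len(X[0])
    w, b = [0.0] * p, 0.0
    for _ in range(steps):
        grad_w, grad_b = [0.0] * p, 0.0
        for xi, yi in zip(X, y):
            score = b + sum(wj * xj for wj, xj in zip(w, xi))
            err = 1.0 / (1.0 + math.exp(-score)) - yi  # predicted P(fake) minus label
            grad_b += err / n
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj / n
        b -= lr * grad_b
        w = [soft_threshold(wj - lr * gj, lr * lam) for wj, gj in zip(w, grad_w)]
    return w, b


def predict(w, b, x):
    """Return 1 (FAKE) if the linear score is positive, else 0 (REAL)."""
    return int(b + sum(wj * xj for wj, xj in zip(w, x)) > 0)


# Made-up toy statements; label 1 = FAKE, 0 = REAL. Not from the study.
texts = [
    "i grew up on a farm and my dad always voted this way",
    "my family has voted this way for generations in our town",
    "i believe in freedom and the constitution and strong values",
    "i believe in fairness and equality and strong values",
]
labels = [0, 0, 1, 1]

X, vocab = featurize(texts)
w, b = fit_l1_logistic(X, labels)
print([predict(w, b, x) for x in X])
print("nonzero weights:", [(vocab[j], round(wj, 2)) for j, wj in enumerate(w) if wj != 0.0])
```

The L1 penalty is what makes this “lasso”: the soft-thresholding step sets weak word weights exactly to zero, so the fitted model points at a short list of words that separate the classes. Raising `lam` shrinks that list, and a large enough value zeroes every weight.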

EDIT 11/4/24:

Kris ran some additional analyses that got a computer to do even better. He combined bidirectional encoder representations from transformers (BERT) with the lasso regression above. As you can see below, BERT is able to discriminate between real and fake statements, although it’s still far from perfect:

WHAT ABOUT THAT COMPUTER THAT CAN TALK TO YOU?

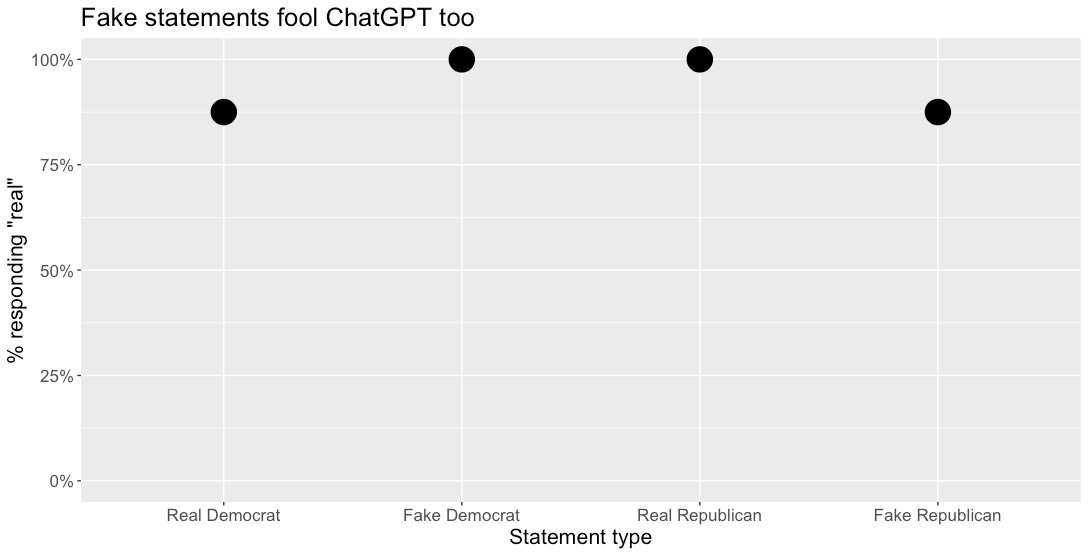

We gave ChatGPT the same instructions that we gave the human participants, and then fed it 48 statements (kind of like taking the study three times). We ran this in May 2024 using the paid version, which I believe was called GPT-4 at the time.

Here’s how it did:

ChatGPT really thought people were telling the truth. There was one statement that began:

I am a Democrat because I am a transgender midget. I feel like I am a woman inside and It does not reflect this. I m currently in transition and I find only democrats accept me. I live in Portland Oregon and love the city as it really reflects who I am.

ChatGPT thought that statement was real. (It wasn’t.) Indeed, ChatGPT was too credulous overall, even more so than humans.

(Sarcastic statements like the one above were really rare. And if you want to see more statements, remember you can try this study yourself.)

DISCUSSION

Do Republicans and Democrats understand one another? The answer from research so far has been a resounding “NO!” According to the Ideological Turing Test, however, both sides seem to understand each other about as well as they understand themselves.

Of course, the ITT isn’t the be-all, end-all measure of misperception. Like any other measure, it’s just one peek at the problem. But this peek seems a bit deeper and wider than asking people to bubble in some multiple choice questions.

I was pretty surprised when I first saw these results, but I can guess why it worked out this way. No matter who you are, you hear about Republicans and Democrats all the time. Everyone knows which side supports abortion and which side wants to limit immigration. Some people argue that media and the internet distort these differences: “Democrats want to abort every child!” “Republicans want to build a border wall around the moon!” I’m sure the constant noise doesn’t help, but it also doesn’t seem to have fried our participants’ brains as much as you might expect, and that’s good news.

These results also suggest that America’s political difficulties aren’t simply one big misunderstanding. If one or both sides couldn’t pass the ITT, that would be an obvious place to start trying to fix things—it’s hard to run a country together when you’re dealing with a caricature of your opponents. When both sides sail through the ITT no problem, though, maybe that means Republicans and Democrats have substantive disagreements and they both know it.

(How do we solve those disagreements? Uhhh I dunno I’m just a guy who asks people stupid questions on the internet.)

LIMITATIONS

We would be remiss not to mention an important limitation of our study. Turing’s original paper mentions a potential problem with his “Imitation Game”:

I assume that the reader is familiar with the idea of extra-sensory perception, and the meaning of the four items of it, viz. telepathy, clairvoyance, precognition and psycho-kinesis. These disturbing phenomena seem to deny all our usual scientific ideas. How we should like to discredit them! Unfortunately the statistical evidence, at least for telepathy, is overwhelming. [...] If telepathy is admitted it will be necessary to tighten our test up. [...] To put the competitors into a ‘telepathy-proof room’ would satisfy all requirements.

Unfortunately, we were not able to locate any telepathy-proof rooms for our study, so this should be considered a limitation and an area for future research.

FUTURE DIRECTIONS

This is just one version of the Ideological Turing Test.[3] You could run lots of different iterations, and some of them might make it harder for the fakers to succeed. Maybe fakers would fall apart if you asked them to write 1,000 words instead of 100. (But maybe it would also be hard for people to write 1,000 words about their own beliefs and still sound convincing.) Maybe people could ferret each other out if you gave them the chance to interact. (But maybe people wouldn’t know which questions to ask, and maybe everybody starts looking suspicious under questioning.) These are great ideas for studies and we have no plans to run them, so we hope you run them and then you tell us about them.

You could also, of course, run lots of ITTs on your favorite social cleavages. Men vs. women! Pro-Palestine vs. pro-Israel! Carnivores vs. vegetarians! Please feel free to use our materials as a starting point; we look forward to seeing what you do.

And remember, if you’ve been thinking to yourself this whole time, “I could do better than these idiots”—well, try the ITT for yourself!

[1] We collected this data in 2019 and it is 100% my fault that we haven’t posted it until now, because it was always a side project and everything else was due sooner, and also I’m a weak, feral human. I would be totally surprised if you got different results today, but weirder things have happened.

[2] Online data is pretty crappy if you don’t screen it, so we screen it a lot; see this footnote for more info. There are fewer Republicans in online samples, so we ran a separate data collection that over-recruited them.

[3] Just want to shout out two studies that used ITT-like-things to study other topics: The Straw Man Effect by Mike Yeomans, and this paper by a team of researchers in the UK. Yeomans finds that people don’t do a good job pretending to support/oppose ObamaCare, while the UK team finds that people are pretty good at pretending to support/oppose covid vaccines, Brexit, and veganism. Maybe the difference is the issues they study, or maybe it’s that they only ask people to list arguments, rather than write a whole statement.
