New paradigm for psychology just dropped

OR: the ideas your mother warned you about

I’ve complained a lot about the state of psychology, but eventually it’s time to stop whining and start building. That time is now.

My mad scientist friends who go by the name Slime Mold Time Mold (yes, really) have just published a book that lays out a new foundation for the science of the mind. It’s called The Mind in the Wheel, and it’s the most provocative thing I’ve read about psychology since I became a psychologist myself—this is probably the first time I’ve felt surprised by something in the field since 2016. It’s maybe right, it’s probably wrong, but there’s something here, something important, and anybody with a mind ought to take these ideas for a spin. I realize some people are skittish about reading books from pseudonymous strangers on the internet—isn’t that what your mom warned you not to do?—but baby, that’s what I’m here for! So let’s go—

THE BOARD GAME OF LIFE, BUT LITERALLY

Lots of people agree that psychology is stuck because it doesn’t have a paradigm, but that’s where the discussion ends. We all pat our pockets and go, “paradigm, paradigm...uh...hmm, I seem to have left mine at home, do you have one?”

Our minds turn to mush at this point because nobody has ever been clear on what a paradigm is. Thomas Kuhn, the guy who coined the term, was famously hard to understand.[1] People assumed that “paradigm shift” just meant “a big change” and so they started using the term for everything: “We used to wear baggy jeans, now we wear skinny jeans! Paradigm shift!”

So let’s get clear: a paradigm is made out of units and rules. It says, “the part of the world I’m studying is made up of these entities, which can do these activities.”

In this way, doing science is a lot like reverse-engineering a board game. You have to figure out the units in play, like the tiles in Scrabble or the top hat in Monopoly. And then you have to figure out what those units can and can’t do: you can use your Scrabble tiles to spell “BUDDY” or “TREMBLE”, but not “GORFLBOP”. The top hat can be on Park Place, it can be on B&O Railroad, but it can never be inside your left nostril, or else you’re not playing Monopoly anymore.

A paradigm shift is when you make a major revision to the list of units or rules. And indeed, when you look back at the biggest breakthroughs in the history of science, they’re all about units and rules. Darwin’s big idea was that species (units) can change over time (rule). Newton’s big idea was that the rules of gravitation that govern the planets also govern everything down here on Earth. Atomic theory was a proposal about units (all matter is made up of things called atoms) and it came with a lot of rules (“atoms always combine in the same proportions”, “matter can’t be created or destroyed”, etc.). When molecular biologists figured out their “central dogma” in the mid-1900s, they expressed it in terms of units (DNA, RNA, proteins) and what those units can do (DNA makes RNA, RNA makes proteins).

If all this sounds obvious, that’s great. But in the ~150 years that psychology has existed, this is not what we’ve been doing.

THERE ARE THREE KINDS OF RESEARCH, BUT ONLY ONE IS SCIENCE

When you’re making and testing conjectures about units and rules, let’s call that science. It’s easy to do two other things that look like science, but aren’t, and this is unfortunately what a lot of research in psychology is like.

First, we can do studies without any inkling about the units and rules at all. You know, screw around and find out! Just run some experiments, get some numbers, do a few tests! A good word for this is naive research. If you’re asking questions like “Do people look more attractive when they part their hair on the right vs. the left?” or “Does thinking about networking make people want soap?” or “Are people less likely to steal from the communal milk if you print out a picture of human eyes and hang it up in the break room?” you’re doing naive research.[2]

The name is slightly pejorative, but only slightly, and for good reason. On the one hand, some proportion of your research should be naive, because there’s always a chance you stumble onto something interesting. If you’re locked into a paradigm, naive research may be the only way you discover something that complicates the prevailing view.

On the other hand, you can do naive research forever without making any progress. If you’re trying to figure out how cars work, for instance, you can be like, “Does the car still work if we paint it blue?” *checks* “Okay, does the car still work if we...paint it a slightly lighter shade of blue??”

(As SMTM puts it: “To get to the moon, we didn’t build two groups of rockets and see which group made it to orbit.”)

There’s a second way to do research that’s non-scientific: you make up a bunch of hand-wavy words and then study them. A good name for this is impressionistic research. If you’re studying whether “action-awareness merging” leads to “flow”, or whether students’ “math self-efficacy” mediates the relationship between their “perceived classroom environment” and their scores on a math test, or whether “mindfulness” causes “resilience” by increasing “zest for life”, you are doing impressionistic research.

The problem with this approach is that it gets you tangled up in things that don’t actually exist. What is “zest for life”? It is literally “how you respond to the Zest for Life Scale”. And what does the Zest for Life Scale measure? It measures...zest for life. If you push hard enough on any psychological abstraction, you will eventually find a tautology like this. This is why impressionistic research makes heavy use of statistics: the only way you can claim you’ve discovered anything is to produce a significant p-value.

Naive and impressionistic research are often respectable-looking ways to go nowhere. For example, if you were trying to understand Monopoly using the tools of naive research, you might start by correlating “the number that appears on the dice” with “money earned”. That sounds like a reasonable idea, but you’d end up totally confused—sometimes people get money when they roll higher numbers, but sometimes they roll higher numbers and lose money, and sometimes they gain or lose money without rolling at all. These inconsistent results could spawn academic feuds that play out over decades: “The Monopoly Lab at Johns Hopkins finds that rolling a four is associated with an increase in wealth!” “No, the Monocle Group at UCLA did a preregistered replication and it actually turns out that odd numbers are good, but only if you fit a structural equation model and control for the past ten rolls!”

The impressionistic approach would be even more hopeless. At least dice and dollars are actual parts of the game; if you start studying abstractions like “capitalism proneness” and “top hat-titude”, you can spin your wheels forever. The only way you’ll ever understand Monopoly is by making guesses about the units and rules of the game, and then checking whether your guesses hold up. Otherwise, you might as well insert the top hat directly into your left nostril.

A SIDE NOTE ON NEUROSCIENCE

We’re going to get to psychology in a second, but first we have to avoid a very tempting detour. Whenever I talk to people about the units and rules of psychology, they’re immediately like, “Oh, so you’re saying psychology should be neuroscience. The units are neurons and—”

Lemme stop you right there, because that’s not where we’re going.

Let’s say you’re trying to fix the New York City transit system, so you’re thinking about trains, stations, passengers, etc. All of those things are made of smaller units, but you don’t get better at designing the system by thinking about the smallest units possible. If you start asking questions like, “How do I use a collection of iron atoms to transport a collection of carbon and hydrogen atoms?” you’ll miss the fact that some of those carbon and hydrogen atoms are in the shape of butts that need seats, or that some of them are in the shape of brains that need to be told when the train is arriving.

Those smaller units do matter, because you’re constrained by what they can and can’t do—you can’t build a train that goes faster than the speed of light, and you can’t expect riders to be able to phase through train doors like those two twin ghosts with dreadlocks from the second Matrix movie. But lower-level truths like the Planck constant, the chemical makeup of the human body, the cosmic background radiation of the universe, etc., are not going to help you figure out where to put the elevators in Grand Central, nor will they tell you how often you should run an express train.

Another example for all you computer folks out there: ultimately, all software engineering is just moving electrons around. But imagine how hard your job would be if you could only talk about electrons moving around. No arrays, stacks, nodes, graphs, algorithms—just those lil negatively charged bois and their comings and goings. I don’t know a lot about computers, but I don’t think this would work. Psychology is similar to software; you can’t touch it, but it’s still doing stuff.

So yes, anything you posit at the level of psychology has to be possible at the level of neuroscience. And everything in neuroscience has to be possible at the level of biochemistry, etc., all the way down, and ultimately it’s all at the whims of God. But if you try to reduce any of those levels to be “just” the level below it, you lose all of its useful detail.

That’s why we won’t be talking about neurons or potassium ions or whatever. We’re gonna be talking about thermostats.

A GOOD WAY NOT TO DIE

Here’s the meat of The Mind in the Wheel: the mind is made out of units called control systems.

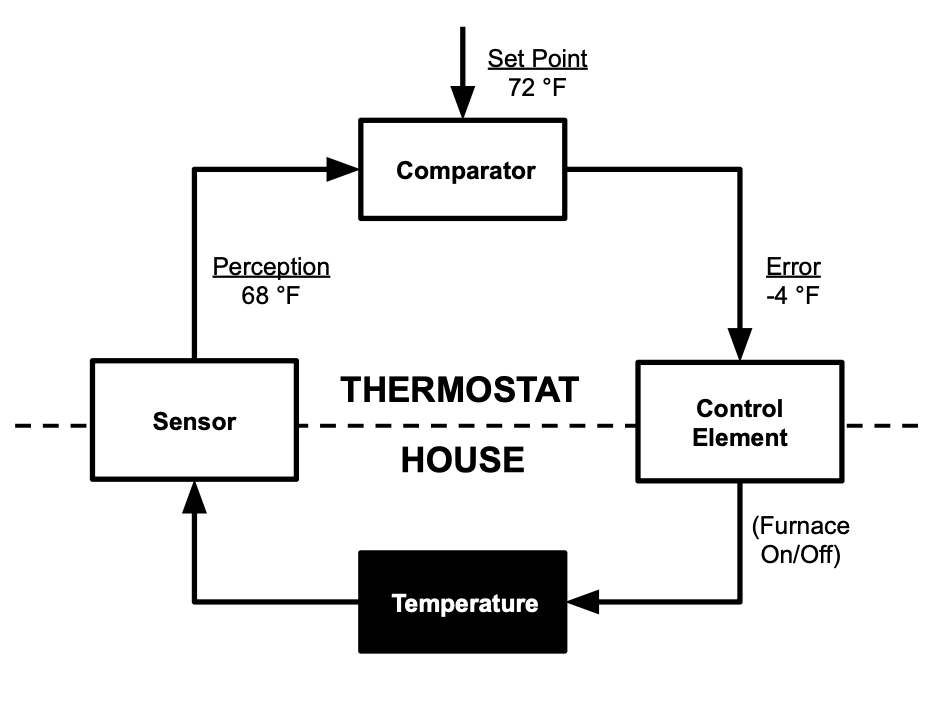

I talked about control systems before in You Can’t Be Too Happy, Literally, but here’s a brief recap. The classic example of a control system is a thermostat. You set a target temperature, and if the thermostat detects a temperature that’s lower than the target, it turns on the heat. If the temperature is higher than the target, it turns on the A/C. The difference between the target temperature and the actual temperature is called the “error”, and it’s the thermostat’s job to minimize it. That’s it! That’s a control system.
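If you'd rather see that loop than read about it, here's a minimal sketch in Python. The function names, the deadband, and the made-up "room" that closes 30% of the gap per step are all my own assumptions for illustration; nothing here comes from the book.

```python
def thermostat_step(target, actual, deadband=0.5):
    """Compare one temperature reading to the target and pick an action.

    The deadband is an assumption: it keeps the toy thermostat from
    flipping between heat and A/C over tiny differences.
    """
    error = target - actual  # positive: too cold, negative: too warm
    if error > deadband:
        action = "heat"
    elif error < -deadband:
        action = "cool"
    else:
        action = "idle"
    return error, action


def simulate(target=70.0, start=60.0, steps=30, rate=0.3):
    """Run the thermostat against a made-up room for a number of steps."""
    temp = start
    history = []
    for _ in range(steps):
        error, action = thermostat_step(target, temp)
        if action != "idle":
            # Assumption: heating or cooling closes a fixed fraction of the gap.
            temp += rate * error
        history.append((round(temp, 2), action))
    return history


if __name__ == "__main__":
    for temp, action in simulate()[:12]:
        print(temp, action)
```

The only thing the thermostat ever "cares" about is the error. Everything else is just machinery for shrinking it.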

It seems likely that the mind contains lots of control systems because they are a really good way not to die. Humans are fragile, and we need to keep lots of different things at just the right level. We can’t be too warm or too cold. We need to eat food, but not too much. We need to be horny sometimes in order to reproduce, but if you’re horny all the time, you run into troubles of a different sort.

The science of control systems is called cybernetics, so let’s call this approach cybernetic psychology. It proposes that the mind is a stack of control systems, each responsible for monitoring one of these necessities. The units are the control systems themselves and their components, and the rules are the way those systems operate. Like a thermostat, they monitor some variable out in the world, compare it to the target level of that variable, and then act to reduce the difference between the two. For simplicity, we can refer to this error-reduction component as the “governor”. Unlike a simple thermostat, however, governors are both reactive and predictive—they try to reduce errors that have occurred, and they try to prevent those errors from occurring in the first place.

Every control system has a target level, and they each produce errors when they’re above or below that target. In cybernetic psychology, we call those errors “emotions”. So hunger is the error signal from the Nutrition Control System, pain is the error signal from the Body Damage Prevention System, and loneliness is the error signal from the Make Sure You Spend Time with Other People System.

(I’m making these names up; we don’t yet know how many systems there are, or what they control.)
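To make "emotion = error signal" concrete, here's a rough sketch of a governor as a tiny data structure. The class, the field names, and the numbers are hypothetical, just like the system names above, and the sketch only covers the reactive half (no prediction).

```python
from dataclasses import dataclass


@dataclass
class Governor:
    name: str      # hypothetical label for the control system
    emotion: str   # the word for this system's error signal
    target: float  # target level of the monitored variable
    level: float   # current sensed level of that variable

    def error(self) -> float:
        """Signed gap between where we want to be and where we are."""
        return self.target - self.level

    def report(self) -> str:
        err = self.error()
        if err == 0:
            return f"{self.name}: on target, no {self.emotion}"
        return f"{self.name}: {self.emotion} (error {err:+.1f})"


if __name__ == "__main__":
    # Made-up units and values, purely for illustration.
    print(Governor("nutrition", "hunger", target=100, level=60).report())
    print(Governor("sociality", "loneliness", target=5, level=5).report())
```

On this picture, "hunger" isn't a thing you have; it's what the gap between `target` and `level` feels like.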

Some of these emotions will probably correspond to words that people already use, but some won’t. For instance, “need to pee” will probably turn out to be an emotion, because it seems to be the error signal from some kind of Urine Control System. “Hunger” will probably turn out to be several emotions, each one driving us to consume a different macronutrient, or maybe a different texture or taste, who knows. If one of those drives is specifically for sugar, it would explain why people mysteriously have room for dessert after eating everything else: the Protein/Carbs/Fiber/etc. Control Systems are all satisfied, but the Sugar Control System is not.

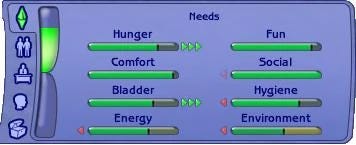

The Sims actually did a reasonable job of identifying some of these drives:

I worry that all of this is sounding too normal so far, so let’s get weirder.

In cybernetic psychology, “happiness” is not an emotion, because it’s not an error from a control system. Instead, happiness is the result of correcting errors. As SMTM puts it, “Happiness is what happens when a thirsty person drinks, when a tired person rests, when a frightened person reaches safety.” It’s kind of like getting $200 for passing “Go” in Monopoly.

I’ll return to this later because I’ve got a bone to pick with it, but for now I just want to point out that “emotion” means something different in cybernetics than it does in common parlance, and that’s on purpose, because repurposing words is a natural part of paradigm-shifting. (In Aristotelian physics, for instance, “motion” means something different. If your face turns red, it is undergoing “motion” in terms of color.) When you get too familiar with the words you’re using, you forget that each one is packed with assumptions—assumptions that might be wrong, but you’ll never know unless you bust ‘em open like a piñata.

‘ELLO GUVNA

Okay, if the mind is made out of these cybernetic systems and their governors, what we really want to know is: how many are there? How do they work?

We’re not doing impressionistic research here, so we can’t just create control systems by fiat, the way you can create “zest for life” by creating a Zest for Life Scale. Instead, discovering the drives requires a new set of methodologies. You might start by noticing that people seem inexplicably driven to do some things (like play Candy Crush) or inexplicably not driven to do other things (like drink lemon juice when they’re suffering from scurvy, even though it would save their life). This could give you an inkling of what kind of drives exist. Then you could try to isolate one of those drives through methods like:

Prevention: If you stop someone from playing Candy Crush, what do they do instead?

Knockout: If you turn off the elements of Candy Crush one at a time—make it black and white, eliminate the scoring system, etc.—at what point do they no longer want to play?

Behavioral exhaustion (knockout in reverse): If you give people one component of Candy Crush at a time (maybe categorizing things, earning points, seeing lots of colors, etc.) and let them do that as much as they want, do they still want to play Candy Crush afterward?

(See the methods sections for more.)

With a few notable exceptions, you can pretty much only do one thing at a time, and each governor has a different opinion on what that thing should be. So unlike the thermostat in your house, which doesn’t have to contend with any other control systems, all of the governors of the mind have to fight with each other constantly. While we’re discovering the drives, then, we also have to figure out how the governors jockey for the right to choose behaviors.

For example, the Oxygen Governor can get a lot of votes really fast; no matter how cold, hungry, or lonely you are, you’ll always attend to your lack of air first. The Pain Governor can be overridden at low error levels (“my ankle kinda hurts but I have to finish this 5k”) but it gets a lot of sway at high error levels (“my ankle hurts so much I literally can’t walk on it”). Meanwhile, people can be really lonely for a long time without doing much about it, suggesting that the Loneliness Governor tops out at relatively few votes, or that it has a harder time figuring out what to vote for.
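Here's one way the voting story could be sketched. To be loud about it: the vote curves, the gains, the caps, and the winner-take-all rule are all guesses I made up so that the three examples above come out right. Nobody knows what the real curves look like.

```python
def votes(error, gain, cap, power=1.0):
    """Hypothetical vote curve: grows with the error, saturates at a cap."""
    return min(cap, gain * max(error, 0.0) ** power)


# Made-up parameters chosen to mimic the examples in the text.
CURVES = {
    "oxygen": dict(gain=100.0, cap=1000.0),         # lots of votes, fast
    "pain": dict(gain=0.5, cap=500.0, power=2.0),   # quiet when low, loud when high
    "loneliness": dict(gain=1.0, cap=20.0),         # tops out at few votes
}


def winner(errors):
    """Return the governor with the most votes, plus the full tally."""
    tallies = {name: votes(err, **CURVES[name]) for name, err in errors.items()}
    return max(tallies, key=tallies.get), tallies


if __name__ == "__main__":
    print(winner({"oxygen": 0, "pain": 3, "loneliness": 50}))   # sore ankle, very lonely
    print(winner({"oxygen": 0, "pain": 30, "loneliness": 50}))  # can't walk on it
    print(winner({"oxygen": 5, "pain": 30, "loneliness": 50}))  # can't breathe
```

With these numbers, a mildly sore ankle loses to loneliness, a wrecked ankle beats it, and a small oxygen error beats everything. Swap in different curves and you get a different creature, which is kind of the point.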

From the get go, this raises a lot of questions. What are the governors governing—is the Loneliness Governor paying attention to something like eye contact or number of words spoken, or is it monitoring some kind of super abstract measure of socialization that we can’t even imagine yet? What happens when two governors are deadlocked? What happens when the vote is really close, is there a mental equivalent of a runoff election? And how do these governors “learn” what things to vote for? No one knows yet, but we’d like to!

ACT NOW AND WE’LL THROW IN PERSONALITY AND PSYCHOPATHOLOGY FOR FREE

Here’s where cybernetics really pops off: if you’re on board so far, you’ve already got a theory of personality and psychopathology.

If the mind is made out of control systems, and those control systems have different set points (that is, their target level) and sensitivities (that is, how hard they fight to maintain that target level), then “personality” is just how those set points and sensitivities differ from person to person. Someone who is more “extraverted”, for example, has a higher set point and/or greater sensitivity on their Sociality Control System (if such a thing exists). As in, they get an error if they don’t maintain a higher level of social interaction, or they respond to that error faster than other people do.
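As a toy illustration, suppose three people get the same three hours of social contact. The function, the units, and every number below are hypothetical, and it assumes the system only complains when you're below the set point.

```python
def social_drive(contact_hours, set_point, sensitivity):
    """How hard a hypothetical Sociality Control System pushes for more contact."""
    error = max(set_point - contact_hours, 0.0)  # only a shortfall counts here
    return sensitivity * error


if __name__ == "__main__":
    same_day = 3.0  # everyone gets the same three hours of contact
    people = {
        "low set point": dict(set_point=2.0, sensitivity=1.0),
        "high set point": dict(set_point=6.0, sensitivity=1.0),
        "high sensitivity": dict(set_point=4.0, sensitivity=3.0),
    }
    for label, params in people.items():
        print(label, social_drive(same_day, **params))
```

Same day, same input, three different amounts of push. The second and third people would both look "extraverted" from the outside, but for different reasons under the hood.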

This is a major upgrade to how we think about personality. Right now, what is personality? If you corner a personality psychologist, they’ll tell you something like “traits and characteristics that are stable across time and situations”. Okay, but what’s a trait? What’s a characteristic? Push harder, and you’ll eventually discover that what we call “personality” is really “how you bubble things in on a personality test”. There are no units here, no rules, no theory about the underlying system and how it works. That’s why our best theory of personality performs about as well as the Enneagram, a theory that somebody just made up.

But that’s not all—cybernetics also gives you a systematic way of thinking about mental illness. When you lay out all the parts of a control system, you’ll realize that there are lots of ways it can break down, and each malfunction causes a different kind of pathology.

For instance, if you snip that line labeled “error”, you knock out almost the entire control system. There’s no voting, no behavior, and no happiness generated. You just sit there feeling nothing, which certainly sounds like a kind of depression. On the other hand, if all of your errors get turned way up, then you get tons of voting, lots of behavior, and—if your behavior is successful—lots of happiness. That sounds like mania. (And so on.)
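Those two malfunctions can be sketched as a single dial on a single toy system. This assumes, purely for illustration, that votes are proportional to the error signal and that corrected error converts one-to-one into happiness; `error_gain` is a made-up parameter standing in for "how much of the error gets through".

```python
def run_system(raw_error, error_gain=1.0, success=True):
    """One pass through a single hypothetical control system."""
    signal = error_gain * raw_error  # what travels down the "error" line
    votes = signal                   # assumption: votes track the signal
    acts = votes > 0                 # no votes, no behavior
    happiness = signal if (acts and success) else 0.0
    return {"votes": votes, "acts": acts, "happiness": happiness}


if __name__ == "__main__":
    print("typical:   ", run_system(10.0))
    print("snipped:   ", run_system(10.0, error_gain=0.0))  # error line cut
    print("turned up: ", run_system(10.0, error_gain=5.0))  # errors amplified
```

Cut the line and everything downstream goes flat. Crank the gain and everything downstream gets loud, but the happiness only shows up if the behavior actually works.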

This is how units-and-rules thinking can get you farther than naive or impressionistic research. Right now, we describe mental disorders based on symptoms. Like: “You feel depressed because you feel sad.” We have no theory about the underlying system that causes the depression. Instead, we produce charts filled with abstractions, like this:

Imagine how hopeless we would be if we approached medicine this way, lumping together both Black Lung and the common cold as “coughing diseases”, even though the treatment for one of them is “bed rest and fluids” and the treatment for the other one is “get a different job”. This is, unfortunately, about the best we can do with a symptoms-based approach—maybe we’ll rearrange the chart as our statistical techniques get better, but we’ll never, ever cure depression. This is why we need a blueprint instead of a list: if we can trace a malfunction back to the part that broke, maybe we can fix it.

When we’re doing that, we’ll probably discover that what we think of as one thing is actually many things. “Depression”, for instance, may in fact be 17 different disorders. I mean, c’mon, one symptom of “depression” is sleeping too much, and another is sleeping too little. One symptom is weight gain; another is weight loss. Some people with depression feel extremely sad; others feel nothing. It’s crazy that we use one word to describe all of these syndromes, and it probably explains why we’re not very good at treating them.

BREATHING IS VERY IMPORTANT, I WOULD ALWAYS LIKE TO BREATHE

I think there’s a lot of promise here, but now let me attack this idea a little bit, so you can see how disputes work differently when we’re working inside a paradigm.

To SMTM, happiness is not an emotion because it isn’t an error signal. Instead, it’s the thing you get for correcting an error signal. Eat a burrito when you’re hungry = happiness. Talk to a friend when you’re lonely = happiness. SMTM suspect that happiness operates like an overall guide for explore/exploit: when you’ve got a lot of happiness in the tank, keep doing the things you’re doing. When you’re low, change it up.

I think there’s something missing here. When you really gotta pee and you finally make it to a bathroom, that feels good. When you study all month for a big exam and then you get a 97%, that feels good too. But they feel like different kinds of good. The first kind is intense but fleeting; the second is more of a slow burn that could last for a whole day. I don’t see how you accomplish this with one common “pot” of happiness.

Or have you ever been underwater for a little too long? When you finally reach the surface, I guess it feels “good” to breathe again, but once you catch your breath, it’s not like you feel ecstatic. You feel more like, I dunno, what’s the emotion for “BREATHING IS VERY IMPORTANT, I WOULD ALWAYS LIKE TO BREATHE”?

I see two ways to solve this problem. One is to allow for several types of positive signals, so not all error correction gets dumped into “happiness”. Maybe there’s a separate bucket called “relief” that fills up when you correct dangerous errors like pain or suffocation. Unlike happiness, which is meant to encourage more of the same behaviors, relief might be a signal to do less of something.

Another solution is to allow for different governors to have different ratios between error correction and happiness generation. Right now we’re assuming that every unit of error that you correct becomes a unit of happiness gained. Let’s say that you’re really hungry, and your Hunger Governor is like “I GIVE THIS A -50!” and then you eat dinner and not only does your -50 go away, but you also get +50 happiness, that kind of feeling where you pat your belly and go “Now that’s good eatin’!”. (That’s my experience, anyway.) But maybe other governors work differently. If you feel like you’re drowning, your Oxygen Governor is like “I GIVE THIS A -1000!”. When you can breathe again, though, maybe you only get the -1000 to go away, and you don’t get any happiness on top of that. You feel much better than you did before, but you don’t feel good. You don’t pat your lungs and go, “Now that’s good breathin’!”
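The arithmetic of that second solution fits in a few lines. The ratios below are my guesses (1.0 for hunger, 0.0 for oxygen), chosen only to reproduce the two stories above.

```python
def after_correction(error_size, ratio):
    """Return how you feel (before, after) correcting an error.

    ratio is the hypothetical conversion rate from corrected error
    to happiness: 1.0 means every unit corrected becomes a unit gained.
    """
    before = -error_size          # the governor's complaint
    after = ratio * error_size    # error is gone, happiness is what's left
    return before, after


if __name__ == "__main__":
    print("hunger:", after_correction(50, ratio=1.0))
    print("oxygen:", after_correction(1000, ratio=0.0))
```

Dinner takes you from -50 to +50. Surfacing takes you from -1000 to zero, which is an enormous improvement that still doesn't feel like a treat.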

Ultimately, the way to test these ideas would be to build something. In this case, you’d start by building something like a Sim. If you program a lil computer dude with all of our conjectured control systems, does it act like a human does? Or does it keep half-drowning itself so it can get the pleasure of breathing again? Even better, if you build your Sim and it looks humanlike, can you then adjust the parameters to make it half-drown itself? After all, most people do not get their jollies from starving themselves of oxygen, but a few do[3], so we ought to be able to explain even rare and pathological behavior by poking and prodding the underlying systems. I don’t think any of this would be easy, but unlike impressionistic research, it at least has a chance of being productive.

CAN’T WAIT TO BE A CARCASS

So far, I’ve been talking like this cybernetics thing is mostly right. To be clear, I expect this to be mostly wrong. This might end up being a totally boneheaded way to think about psychology. That’s fine! The point of a paradigm is to be wrong in the right direction.

The philosopher of science Karl Popper famously said that real science is falsifiable. I think he didn’t go far enough. Real science is overturnable. That is, before something is worth refuting, it has to be worth believing. “I have two heads” is falsifiable, but you’d be wasting your time falsifying it. First we need to stake out some clear theoretical commitments, some strong models that at least some of us are willing to believe, and only then can we go to town trying to falsify them. Cunningham’s Law states, “The best way to get the right answer on the Internet is not to ask a question; it’s to post the wrong answer.” Science works the same way, and the bolder we can make our wrong answers, the better our right answers will be.

This has certainly been true in our history. The last time psychology made a great leap forward was when behaviorism went bust. Say what you will about John Watson and B.F. Skinner, but at least they believed in something. Their ideas were so strong and so specific, in fact, that a whole generation of scientists launched their careers by proving those ideas wrong.[4] This is what it looks like to be wrong in the right direction: when your paradigm eventually falls, it sinks to the bottom of the ocean like a dead whale and a whole ecosystem grows up around its carcass.

When we killed behaviorism, though, we did not replace it with a set of equally overturnable beliefs. Instead, we kinda decided that anything goes. If you want to study whether people remember circles better than squares, or whether taller people are also more aggressive, or whether babies can tell time, that’s all fine, as long as you can put up some significant p-values.[5] The result has been a decades-long buildup of findings, and each one has gone into its own cubbyhole. We sometimes call these things “theories” or “models”, but when you look closely, you don’t see a description of a system, but a way of squishing some findings together. Like this:

This is what impressionistic research looks like. You can shoehorn pretty much anything into a picture like that, and then you can argue for the rest of your career with people who prefer a different picture. Or, more often, everyone can make their own pictures, add ‘em to the pile, and then we all move on. Nothing gets overturned, only forgotten.

When you work with units and rules, it looks more like this:

It’s not that we should use boxes and lines instead of rainbows. It’s that the boxes and lines should mean something. This diagram claims that the “primary rate gyro package”, whatever that might be, is a critical component of the system. Without it, the “attitude control electronics” wouldn’t know what to do. If you remove any of those boxes and the system still works, you know you got the diagram wrong. (Of course, if you zoom into any of those blocks, you’ll find that each of them contains its own world of units; it’s units all the way down.) This is very different from the kinds of boxes and lines we produce right now, which contain a mishmash of statistics and abstractions:

When you’re doing impressionistic research like that, you can accommodate anything. That’s what I often find when I talk to psychologists about units and rules: they’ll light up and go, “Oh yes! I already do that!” And then they’ll describe something that is definitely not that. Like, “I study attention, and I find that people pay more attention when you make the room cold!” But...what is attention? Where does it go on the blueprint? The fact that we have a noun or a verb for something does not mean that it’s a unit or a rule. Until you know what board game you’re playing, you’re stuck describing things in terms of behaviors, symptoms, and abstractions.[6]

So, look. I do suspect that key pieces of the mind run on control systems. I also suspect that much of the mind has nothing to do with control systems at all. Language, memory, sensation—these processes might interface with control systems, but they themselves may not be cybernetic. In fact, cybernetic and non-cybernetic may turn out to be an important distinction in psychology. It would certainly make a lot more sense than dividing things into cognitive, social, developmental, clinical, etc., the way we do right now. Those divisions are given by the dean, not by nature.

But really, I like cybernetic psychology because it stands a chance of becoming overturnable. And I’d love to see it overturned! We’d learn a lot in the process, the same way overturning a rock in the woods reveals a whole new world of grubs ‘n’ worms ‘n’ things. I’d love to see other overturnable approaches, too, other paradigms that propose different universes of units and rules. If you hate control systems, that’s fine, what else you got? I like how cybernetics has unexpected implications for learning, animal welfare, and artificial intelligence, that’s fun for me, that tickles the underside of my brain, so if your paradigm also connects things that otherwise seem to have nothing to do with each other, please, tickle away![7]

In a healthy scientific ecosystem, this kind of thing would be happening all the time. We’d have lots of little eddies and enclaves of people doing speculative work, and they’d grow in proportion to their success at explaining the universe. Alas, that’s not the world we have, but it’s the one we ought to build. If only we had more Zest for Life!

[1] In his defense, Kuhn didn’t expect his book to blow up like it did, and so what he published was basically a rough draft of the idea. He spent the rest of his career trying to counter people’s misperceptions and critiques, but this didn’t really clear anything up for reasons that will be understandable to anyone who has gone several rounds with a peer reviewer.

[2] It’s worth noting that two of these findings (the “networking makes you feel dirty” effect and the “eyes in the break room make you steal less milk” effect) have been the subject of several failed replications. The networking study was done by a researcher credibly suspected of fraud, but even if there wasn’t foul play, we should expect the results of naive research to be flimsy. If you have no idea how the underlying system works, then you have no idea why your effect occurred or how to get it again. The methods section of your paper is supposed to include all of the details necessary to replicate your effect, but this is of course a joke, because in psychology nobody knows which details are necessary to replicate their effects.

[3] Apparently some folks even like to hold their pee for a long time so they can achieve a pee-gasm, so we should be able to model this as well. (I promise that link is as safe for work as it could be.)

[4] Noam Chomsky, for instance, got famous for pointing out that behaviorism could not explain how kids acquire language. William Powers, the guy who first tried to apply cybernetics to psychology in the 1970s, was still beating up on behaviorism, decades after it had been dethroned. (Powers’ ideas were hot for a second and then went dormant for 50 years and no one knows why.)

[5] Note that judgment and decision making, Prospect Theory, heuristics and biases, etc. (which are perhaps psychology’s greatest success since the fall of behaviorism) are themselves an overturning of expected utility theory.

[6] I’ve now run into a few psychologists who are certain that their corner of the field has this nailed down, but whenever they lay out their theory, this is always the thing missing: there’s nothing left to fill in, nothing that unifies things we would intuitively see as separate, or separates things we would intuitively see as unified. But look, I err on the side of being too cynical. If you’ve got this figured out, great! Please do the rest of psychology next.

[7] This is the most common failure mode for The Mind in the Wheel, but there are two others I’ve encountered. Some people think it’s too old: they’ll go, “Oh, this is just...” and then they’ll name some old-timey research that kinda sorta bears a resemblance and assume that settles things. Or they’ll think it’s too new: “Things are mostly fine right now, so why listen to these internet weirdos?” I find this usually breaks down by age: old people want to dismiss it, young people want to understand it. And hey, maybe the old timers will ultimately be proven right, but they’re definitely wrong to feel so confident about it, because no one knows how this will pan out. I always find it surprising when I meet someone whose job is to make knowledge and yet they seem really really invested in not thinking anything different from whatever they think right now.

I hope to live to see the day of "Inside Out 3" where the Make Sure You Spend Time with Other People System is broken and needs fixing.

I did a quick ctrl-f through the first few chapters of the book, and Norbert Wiener is mentioned just a couple times, and only in the context of his original work. I think that's a serious omission, given that Wiener wasn't just the coiner of the terms "cybernetics" and "control systems", but spent a huge amount of effort relating that to psychology and psychological issues.

From ChatGPT's summary: "Wiener's Cybernetics: Or Control and Communication in the Animal and the Machine (1948) extends feedback analysis to the nervous system and to psychiatric disorders (e.g., schizophrenia as a breakdown of feedback)."

Ignoring this part of Wiener's work means that SMTM also ignored all the work that followed after it, like Karl Friston's work (zero mentions in SMTM as far as I can tell), which spends a lot of time discussing happiness in the context of control systems. Again, from ChatGPT: "In “What is Mood? A Computational Perspective” (Clark, Watson & Friston 2018), mood = a hyper-prior over expected precision; momentary happiness corresponds to downward shifts in expected surprise."

I'd really want an explanation from SMTM why they omitted this, because, at the very least, this is a serious failure to engage with the literature.